The rise of China as a high-tech superpower

The prospect of a booming Chinese tech sector is setting off alarm bells in Washington, DC. But what is Europe’s place in the cold war over tech?

In the early hours of a cool spring morning in Penn Valley, Pennsylvania, Temple University professor Xiaoxing Xi was awoken by someone at his front door. BANG! BANG-BANG! Forceful, intimidating – “Who knocks on people’s doors like that?” Xi thought before rushing downstairs. The Federal Bureau of Investigation, it turns out, is who knocks on people’s doors like that.

Xi has since testified in Congress and in interviews as to how government agents poured into his home, handcuffed him, marshalled his wife and children out of their rooms at gunpoint and proceeded to search the family’s home in their quiet Philadelphia suburb.

It was May 2015, and the university professor had been under surveillance for months. Based on his email activity, the FBI suspected that Xi was transmitting classified details of a pocket heater – an advanced instrument used in superconductivity research – to China.

Dramatic and life-changing as it may be, this type of raid is now routine work for FBI agents. The bureau has officially singled out Chinese tech espionage as its top counterintelligence priority and a “grave threat to the economic well-being and democratic values of the United States”.

Intense counterintelligence efforts

Over the past decade, intense counterintelligence efforts have been afoot in Silicon Valley and at universities across the US. In 2018, they culminated in the China Initiative, launched by former President Donald Trump’s Department of Justice.

The initiative, dismantled by President Joe Biden in early 2022, was a well-funded scheme devised to foil Chinese industrial espionage in cutting-edge research and business. Because, surely, Chinese spies had infiltrated these institutions to steal American tech secrets?

One thing is for sure: China’s tech ambitions are great. In the autumn of 2020, President Xi Jinping revealed China’s new five-year plan. The plan preceding it had set growth targets for a nation still climbing out of relative poverty, and in that five-year span GDP per capita grew by 30%. Millions of Chinese were lifted out of relative poverty, and some became very rich. In 2021, GDP per capita increased by 21% in a single year.

And even if the 2022 congress says little of growth, the Chinese tech sector has proven to be a formidable engine, with companies like Baidu, Tencent, Alibaba, Bytedance and Xiaomi becoming juggernauts feared even in Silicon Valley.

Wants to learn from the West

The objectives of Chinese innovation are diverse, but they are mainly focused on achieving the Chinese Communist Party’s goals for the nation: prosperity, modernisation and self-reliance.

It should come as no surprise that China wants to learn from the West. The Chinese government is actively working to counteract the brain-drain of Chinese researchers and engineers who are relocating to the US. They have attractive programs in place to encourage repatriation, and Chinese law stipulates that every citizen must co-operate if the authorities ask for assistance – or even trade secrets.

These laws are at the heart of the concerns over Chinese intellectual property (IP) theft. Over the past couple of years, these fears have led to several large Chinese tech companies being sanctioned – and crippled – by the US. Among them, the mobile communications companies ZTE and Huawei.

Another major difference in innovation strategies is the way Chinese authorities invest heavily in key areas and set long-term targets for private and public sector innovation. They have an ambitious program for conquering space, of course, but there are more strategic endeavours where China hopes to become a world leader. The key fields of strategic importance are transistor technology, quantum computing, superconductors, weapons technology, artificial intelligence and any technology – such as social media and 5G infrastructure – that expands its surveillance capabilities.

Lagging behind

Transistor technology, which is found in the advanced factories in neighbouring Taiwan, is a priority because this underpins all digital technologies. China is currently lagging a few generations behind the state-of-the-art in this field and some western think-tanks argue that maintaining China’s dependence on other countries for advanced chips is crucial.

Quantum computing research is a race where the state that first manages to harness the technology will achieve the capability to decrypt communication today thought to be secure, along with many other exciting applications. The government lists quantum technology as the second priority, after artificial intelligence. It should be noted that this research is still embryonic and by no means a quick fix for China’s chip-making problems.

Superconductors promise to revolutionise our use of electricity as they provide zero-resistance transmission of electricity. China is slowly catching up to the UK and US in this nascent and investment-demanding domain. They are already leaders in the adjacent field of solid-state batteries which, among other things, can increase the range of electric vehicles and drones.

A constant race

Weapons technology is a constant race to stay ahead of the curve, to ensure adequate deterrence against potential attacks. Currently the name of the game is drone tech, battlefield AI and cyber-warfare – all disciplines where Chinese tech is at the bleeding edge.

Social media, payments systems and communications infrastructure are examples of technologies that facilitate mass-scale surveillance. Currently the Five Eyes pact (US, UK, Canada, Australia and New Zealand) is leading this field. However, China has invested heavily in domestic surveillance, including a vast network of CCTV cameras and an equally impressive network of human informants. Recent controversies over TikTok, Huawei 5G and cell phone brands like ZTE and Huawei are indicative of western fears that ubiquitous Chinese tech exports may propel its authorities’ surveillance powers onto the global stage.

While competition is fierce in these fields and beyond, artificial intelligence has emerged as the most hotly contested battleground. State-of-the-art AI – and in a possible future, artificial general intelligence, which is human-level AI and beyond – has the potential to turbocharge all other research.

Much has been made of the vast troves of data that Chinese companies could mine from the nation’s almost one billion internet users. This dataset could be the key to China surpassing the AI efforts of other nations. In a recent report, the Future Today Institute warns that Chinese companies such as Tencent and Baidu have superpowers, thanks to their access to this data “without the privacy and security restrictions common in much of the rest of the world”.

However, the recently enacted Personal Information Protection Law (PIPL) mirrors Europe’s GDPR, affording Chinese users many of the same protections as EU citizens. The Communist Party has further proven willing to play hardball with its most profitable companies, imposing some of the highest fines ever on its own tech juggernauts. Companies in violation of PIPL may find themselves facing fines of up to five percent of their annual revenue.

In other words, the national treasure of Chinese data is not free for companies like Tencent and Baidu to mine at their will. That level of power is reserved for the Chinese state itself.

While China’s tech ambitions are a boon to many Chinese, who have seen technology add comfort and convenience to their lives, technology always has the potential to be used for both good and bad. Surveillance is pervasive in China, with a vast network of CCTV cameras surveilling public spaces, and an immense network of human informants keeping track of neighbourhoods throughout the country.

State surveillance is culturally ingrained, a fact of life since the Cultural Revolution and even long before. China’s controversial social credit system has precursors that date as far back as the third century. Many Chinese seem to accept and even welcome this type of surveillance.

But there is a high cost for minorities such as the Uyghurs in the Xinjiang province, who are systematically targeted, suspected of terrorist affiliations due to their ethnicity alone, and sent to re-education camps if found to be engaging in any sort of behaviour deemed suspicious by authorities.

These human rights concerns make China’s technological rise seem ominous, and they have been rightly criticised by human rights groups and democratic countries in the west. It is ironic then that the United States is likewise using its tech prowess to monitor and target ethnic minorities, like Xiaoxing Xi.

FBI lacked expertise

After the FBI raided Xi’s home, the Temple University professor was suspended from his job and he faced the prospect of spending the rest of his life in prison.

Then, after four months, all charges against him were suddenly dropped. Xi’s colleagues had convinced the Department of Justice that the schematics he had emailed to China were, in fact, detailing a widely published innovation of his own, which had nothing to do with pocket heater technology. The FBI simply lacked the scientific expertise to understand it.

For Xi, the damage was already done. Not only was his reputation shattered, the suspicion of treason hung over him like a dark cloud. He had lost his sense of belonging and security in his home country, as a naturalised citizen of the United States.

Xi’s case is far from unique. The US finds itself in a predicament in which its companies need Chinese talent to stay competitive, but the US government fears the leaking of trade secrets and intellectual property to the rival nation.

In the past decade, US authorities have targeted hundreds of academics of Chinese descent – many of them American citizens – on suspicion of possible espionage. A few cases have been tried in court. There have been convictions, mostly for the common (but illegal) practice of trying to enrich oneself by transferring intellectual property from a previous employer to a new one.

Not one case has resulted in a conviction for espionage.

As the relationship between China and the US shows no sign of thawing, European countries must decide what role they want to play in this cold war over tech supremacy. China and the US have shown that they are both willing to play dirty to win this race. European countries will have to forge their own path, or risk ending up as collateral damage.

Sam Sundberg

Freelance writer, Svenska Dagbladet

Future trends for regulating the digital economy

Today we live in a very different world than we did just a few years ago. Everything has changed: the geopolitical landscape, the energy market and the cost of living. What has also changed is the view on regulating the Internet.

Some years ago, most politicians in the Nordics believed that the Internet should be free of rules and that a liberal regime was the only true guardian of innovation. Tech companies should not be liable for the content on their platforms and big tech should be able to grow by acquiring start-ups.

The pendulum has now swung to the other side, and in 2022, we see overwhelming support from Nordic decision-makers for the EU landmark regulations over the digital landscape, namely the Digital Services Act (DSA) and the Digital Markets Act (DMA).

Both regulations were adopted by the EU in summer 2022, and they will become reality by early 2024, at the latest. These instruments will have a huge impact on the way platforms deal with liability for illegal content, as well as how big tech companies should deal with their business users. The guiding principles for the new regulations are focused on creating fair and transparent rules that level the competition in the market and protect users and consumers from unfair commercial practices.

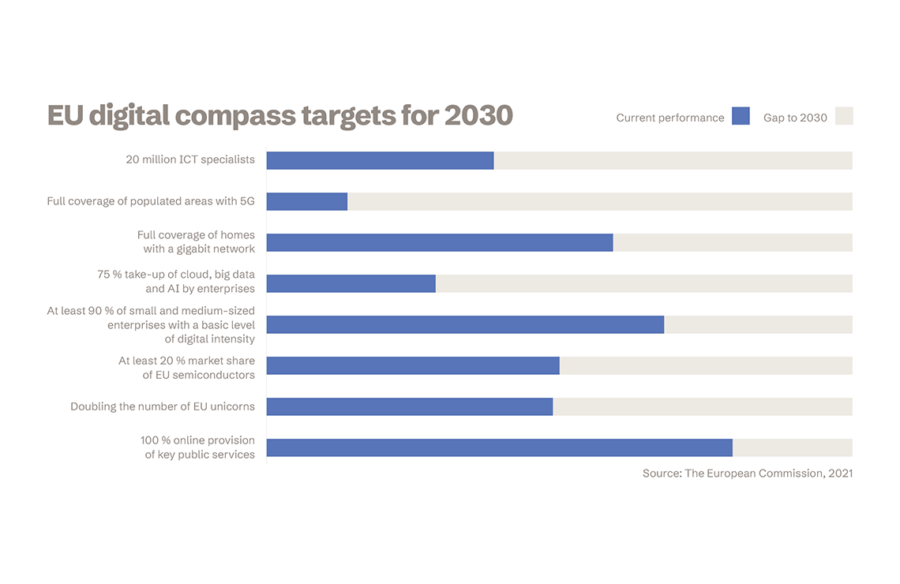

The current EU Commission is now halfway through its mandate and has already achieved a lot by means of proposing new digital regulation and becoming a force against the big tech companies. The Commission has set up several goals for 2030 related to increasing tech talent and the number of European unicorns, but they still have a long way to go to meet their goals.

But while the EU wants to boost the European digital economy, it also wants to create a safe internet based on European values. This is a tricky balancing act between those who want liberal rules that allow for innovation and increased global competition, and those who want heavy regulation that protects the consumers of digital services.

The EU’s objective can be summarised in two words: values and sovereignty. Or in the words of Commission president Ursula Von der Leyen: “Digital sovereignty is not just an economic concept. We are a Union of values. One of the great questions is: How can we preserve and promote our values in a digitised world?”

Recent global events have undoubtedly strengthened the belief among EU policymakers that the EU needs to be its own strong force and promote a distinct ‘third way’ of regulating the digital economy, somewhere in between the USA’s ‘laissez-faire’ approach and Chinese authoritarianism.

This will lead – and has already led – to more regulatory oversight and enforcement powers at the EU level, making Brussels an increasingly important hub of tech regulation. This centralisation will make the European Commission a bigger interventionist player in the digital economy, not only by proposing new legislation but also by monitoring and ensuring compliance, which will take responsibility away from national authorities and make the rules even more harmonised across the EU.

Based on this new landscape, there are four major regulatory trends that will set the stage for the coming Internet rule book.

Safeguarding EU values

This EU Commission is much more political and principles-based than previous Commissions. It has taken up the fight with big tech, at the urging of France, but it is also taking a political stance against countries such as Poland and Hungary that are an increasing source of irritation to the Commission and other Member States with their populistic and nationalistic agenda.

As part of this battle, the Commission wants to protect the European value of freedom of expression, enshrined in the EU Charter of Fundamental Rights, by introducing a proposal that aims to protect the safety of journalists and the independence of newsrooms from any external influence, such as states, owners and platforms.

This proposal will be much debated, as regulating the media is a sensitive issue that typically is left at the national level. The fact that the EU Commission is considering a proposal to regulate media on EU level shows that it will do anything to protect the values that it holds dear. But it will be an uphill battle to defend this proposal against member states and media companies that oppose any regulation of freedom of expression beyond the national level.

Protecting consumers, minors and privacy

One of the most discussed and contested issues in digital regulation is the use of personal data for targeted advertising. Many EU decision-makers are frustrated with the slow and inefficient enforcement of the General Data Protection Regulation (GDPR), and the need for a targeted revision of the rules is under discussion. The DSA and the DMA have already included specific rules to ban targeted advertising directed towards minors and stricter consent rules for digital gatekeepers, but there is a clear political push to do more to protect privacy.

We also see a continued push from EU institutions towards greater transparency regarding the use of algorithms, with the aim to ensure that people are empowered and informed about them. And similarly, we expect some moves to regulate the use of so-called ‘dark patterns’ and platform designs that are perceived to manipulate and steer user choice and inhibit freedom of choice online.

Fostering EU innovation

The European Commission will propose a series of legislative measures to strengthen the EU’s position as a hub for emerging technology innovation. This is expected to include a review of the regulatory definitions of ‘start-ups’ and ‘scale-ups’ in 2023, intended to promote the emergence and success of EU technology companies. On the one hand, these measures are an attempt to make it easier to create new digital services, through a common EU digital identity and a review of EU competition policy, ensuring that competition instruments (merger control, market definitions and State aid control) are fit-for-purpose in a fair and balanced digital market. On the other hand, the EU will also look at regulating new technologies.

One example is the AI Act that is currently under discussion, in which the EU aims to regulate a technology that is still developing. The objective is to scrutinise certain AI technologies that can be seen as high-risk, such as facial recognition. But some are also of the opinion that AI used in newsrooms, such as bots and computer-created images, should be seen as high-risk AI, as the content may not be trustworthy and therefore in need of heavy regulation.

Promoting a circular economy

The EU’s ambition to be climate-neutral by 2050 remains the guiding policy for the green transition, which has also impacted digital regulation. The EU Commission has adopted a package of proposals for the circular economy, empowering consumers in the green transition and making sustainable products the norm.

A key proposal on Ecodesign for Sustainable Products Regulation (ESPR) introduces (among other things) a digital product passport for new products, as well as certain requirements for online marketplaces.

The EU will also propose a regulation on Right to Repair this autumn that will require products to be repairable and, as a result, prolong the average product lifetime.

At the same time, there is enormous political pressure to increase product liability of online marketplaces, which could include liability for green claims of products.

Schibsted’s voice

Schibsted is a leading voice and will actively contribute to a regulatory landscape that allows for the innovation of state-of-the-art digital products and services in the Nordic market. We will focus on calling for effective implementation and enforcement of EU regulations, such as the DSA and the DMA, and ensuring balanced regulation for online marketplaces, as well as the continued ability to use data to create relevant products and services for our users. We will defend editorial independence from external influence and the Nordic self-regulatory system in the media. And we will support efforts to promote sustainable products and circular consumption in Europe.

Petra Wikström

Director of Public Policy

Years in Schibsted: 4

Influencers might need new skills to survive

Social media is fundamentally changing. Algorithms focusing on our interests will make us more passive, and influencers are in for a challenge.

We have now entered the third era of social media algorithms. This new development has major implications for some of the tech world’s leading players and for our personal well-being, and is one of the trends that will affect us most in the coming years.

Time is money

The first era of algorithms was simple by today’s standards. We as users decided for ourselves what interested us and which accounts we would follow. Then posts from those accounts began appearing in our feeds in chronological order.

During the second era they were shuffled up so that posts from accounts we already followed were mixed with posts our friends commented on and with accounts that resembled the ones we already followed.

Now we have entered the third era, where we don’t even need to tell social media what we’re interested in. It doesn’t matter which accounts we follow. Recommendation algorithms are now becoming so accurate that they always give us what we want without us having to actively tell them what that is.

Just like before, it’s all about consuming as much user time as possible. Time is money or, to put it more precisely, the more companies can hold our attention, the more advertising they can sell. They earn more money – and therefore create more value – for their shareholders.

This era also goes to show that we ourselves don’t know what we want. The companies can figure that out for themselves and then get us to spend our valuable time on them.

The principle isn’t new, but the amount of money being invested in developing it is. And it’s TikTok that’s leading the way. Its parent company Bytedance spent SEK 163 billion on research and development in 2021 alone. Developing a market-leading algorithm is expensive.

Algorithm development is also transforming how we use social media. Previously, users would interact with friends and acquaintances and share their everyday life with them through photos and status updates. The apps served as extensions of our social lives.

Now the focus has shifted to entertainment. Here, too, TikTok is in the driver’s seat. On its platform, it’s not who you follow that determines what content you view, but rather the type of content you like. The social function has been peeled away. And its competitors are following suit.

Video is the new gold

For the social media giants, video is the new gold. Instagram and Facebook are fighting to get Reels, their TikTok clone, to take off. YouTube is investing heavily in the very similar Shorts format. It’s about reversing a trend where, for example, Instagram and Facebook owner Meta is seeing its first ever decline in user growth and revenues.

This trend is also redrawing the map for influencers who enjoyed huge success and earned large amounts of money on those platforms. For many years now they could sit back and enjoy growing audiences and engagement, which guarantees collaborating advertisers a certain amount of exposure. But what happens when followers cease to be so important?

The fact that TikTok’s algorithm is based on interest rather than audience size means that anyone can go viral. This summer Instagram tried to roll out similar changes in its algorithm but faced fierce pushback from the most established influencers, from Kim Kardashian to Swedish Rebecca Stella. In SvD, one influencer described the new reality on the platform as “Russian roulette”.

Instagram had to admit it was wrong and withdrew the changes, but it’s probably only a matter of time before the new algorithm returns. Instagram simply can’t afford not to keep up with users’ changing behaviour, and has declared Reels the future of the platform.

Influencers need to start over

For many of the leading influencers around the world, this means they will have to start over, learning new tricks and understanding user behaviour on a new platform. Those who built careers on generating engagement by posting nice pictures will suddenly have to learn how to make videos and create a different type of content. Not everyone will survive the transition.

And perhaps that’s the natural process of succession; after all, it’s normal in most industries for new skills to emerge and for old ones to die out, and for companies to change their strategies.

So how are the platforms’ new, advanced recommendation algorithms affecting us users? We’re becoming less active and more passive. We’re using the platforms less and less for keeping in touch with friends and acquaintances. And instead we passively scroll through infinite feeds over which we have no control. One aspect of it is how it makes us feel.

Research on psychological well-being and social media use is still in its infancy, and it’s very difficult to say anything about cause and effect, but there are some indications – and they’re pointing in the same direction. A study conducted in the United States found that individuals who passively consume social media content run a 33% higher risk of developing symptoms of depression, while the same risk for active users is 15%. A study conducted in Iceland on more than 10,000 adolescents found that passive consumption was correlated with anxiety and symptoms of depression. The same correlation was not found in active users, even after controlling for other factors.

As already mentioned, the relationship between cause and effect is not easy to establish, but we can be pretty certain that development of the algorithms has more to do with enriching the social media giants’ shareholders than it has with making life better for us users.

Sophia Sinclair

Tech Reporter SvD Näringsliv

Years in Schibsted: 4

Henning Eklund

Tech Reporter SvD Näringsliv

Years in Schibsted: 2

“Algorithms can encourage empathy and connections”

Victor Galaz is deputy director and associate professor at Stockholm Resilience Center, and a writer for Svenska Dagbladet.

Could AI make us care about the climate? Or will it just bring a flood of auto-generated disinformation? It’s Victor Galaz’s job to find out.

Next year, Routledge will publish Victor Galaz’s book Dark Machines, an essay on the impact of artificial intelligence in a future of climate change. As deputy director and associate professor at Stockholm Resilience Center (and a writer for Svenska Dagbladet), he spends a lot of time pondering resilience and sustainability. Over Zoom, from his home in Stockholm, he explains what makes for a resilient society.

“It’s a society with the capacity to predict, adapt to and recover from shocks. In that process, it also innovates and renews itself. For instance, the war in Ukraine and the pandemic pose huge challenges for global food systems, energy systems and so on. However, we shouldn’t strive to get back to normal from this point, because we need to change these things anyway. Our societies need to evolve.”

How resilient is our society?

“Different societies have different levels of resilience. A country with weak public institutions and little money is always more vulnerable than a country like Sweden. However, one difference between the world today and the world twenty years ago is that we’re much more global and interlinked. A disturbance in one part of the world rapidly spreads to other parts.”

Do we have the resilience needed for future challenges?

“We can never take that for granted. Climate change and loss of biological diversity pose massive challenges. Over time, our drive to optimise and maximise has created huge values for a lot of people. But we have never lived in a time of climate change like this one, and we simply don’t know yet if we can handle it.”

In his upcoming book, Victor Galaz explores how AI is cause for both hope and concern among climate scientists. He talks of a “silent tsunami” of AI seeping into all aspects of our society – more or less unnoticed.

Is AI a threat in itself or is it a matter of who controls it?

“Technologies are not neutral. Some AI systems are explicitly designed to harm us, for instance through surveillance and discrimination of ethnic minorities. That said, it is a matter of control and of fair distribution of the enormous gains these new technologies bring.”

Regulating new technologies is a notoriously tough task. As the British academic and writer David Collingridge once pointed out: “When change is easy, the need for it cannot be foreseen; when the need for change is apparent, change has become expensive, difficult and time consuming.”

The challenge, then, is foreseeing the future. If we fail, AI will bring unintended and unwanted consequences, according to Victor Galaz.

“There are some direct climate effects of AI, such as energy costs, social costs and environmental impacts. We are coming to terms with these. But then there are indirect, long-term effects that are even bigger, and much harder to manage. Take digitalisation of agriculture, for instance. As we use technologies to optimise and maximise food production, we get enormous monocultures, as these are the most efficient, and we see the end of small-scale farming, loss of local job opportunities and more vulnerable ecosystems. And these are just some examples. Another is mass-scale climate disinformation through social media bots.”

If we do solve the problem of control, how can AI contribute to a resilient society?

“In two ways. Firstly, it will give us a better understanding of how our planet is changing, and how dependent we are on it. Secondly, it could help expand our empathy with other people and even with other species. Just as algorithms can exploit negative emotions to drive engagement in social media, they can encourage empathy and connection.”

“These and other emotions are important to bring about change. Just look at the mass appeal of Greta Thunberg. She is sad. She is disappointed. She is angry. These emotions make people care.”

Sam Sundberg

Freelance writer, Svenska Dagbladet

Campanyon makes nature accessible to everyone

The way we travel is changing. During the pandemic the few opportunities left for travel were local and in nature, away from crowds. With tourism now back in full swing, the industry is signalling that this trend is here to stay. And Norwegian start-up Campanyon is at the forefront of it.

With over 10,000 bookable stays across more than 20 countries, the online booking platform Campanyon has already established itself as the leading platform for outdoor stays across the Nordics – only a year after launch. It’s now aiming to strengthen its position across Europe.

Talk to any entrepreneur and they’ll tell you that timing is critical, both for when to launch a new business and for whether it succeeds. The same held true for Kristian Qwist Adolphsen and Alexander Raknes, the two founders of Campanyon, when they decided to explore Campanyon as a new business idea in spring 2020.

A passion for sports and the outdoors

The two originally met while studying at Copenhagen Business School, where they quickly became friends due to their shared passion for entrepreneurship, sports and the outdoors. They ended up working together at the digital marketing agency Precis Digital, and eventually, they both joined Google. It was there that the first ideas around Campanyon were formed.

After being sent home from the Google offices shortly after the Covid pandemic hit, the pair spotted some new and interesting trends emerging across various industries, as a direct result of the lockdown. One of the trends that captured their attention was the increasing appetite for being in nature, as people were longing to escape isolation but were banned from travelling abroad. This resulted in new records for nature-focused and camping-related search terms and overnight stays.

Alexander and Kristian decided to do more research on this budding market and quickly realised it was extremely difficult to both find and book places in nature in a seamless way, mainly due to it being a very fragmented market consisting of small platforms with limited supply. At the same time, they couldn’t find any platform in the Nordics that attempted to unlock unused private land for campers to book and stay.

“It was very clear from early on that the market and appetite for local, authentic, and nature-focused stays was growing. At the same time, there were very few established players offering user-friendly solutions – which we found interesting,” Kristian says.

Being an avid skier, surfer and mountaineer, Alexander could relate to the trend they were observing.

“I, too, had been longing for cheaper and more sustainable options to spend the night in nature, get local tips and meet like-minded people.”

Teamed up with former colleagues

Those insights led to the early start of Campanyon, which began during late spring of 2020. A few months later, the two teamed up with former colleagues Aline Nieuwlaat, Sven Röder and Werner Huber, who are all highly experienced in product engineering and UX design, and they quickly became Campanyon’s co-founders, too.

Aline was just wrapping up her work on a food app when Alex called her to let her know about the idea for Campanyon, something that immediately resonated with Aline.

“I’m a passionate camper so when Alex called, I was instantly committed to join the journey! Just before that I saw an ad from another player in the market and thought to myself how smart the idea was to offer private land to campers.”

Funnily enough, the five co-founders are based in five different countries. The first time they met in person after they started working on Campanyon was in December 2021 – the day they signed the deal with Schibsted Ventures in Oslo and around one-and-a-half years after they began working together on Campanyon.

Being born out of Covid and having a fully remote setup from day one, the team knew this would come with both opportunities and challenges. They have been fortunate to learn from leading companies, such as Google, on how to approach and adapt to working remotely and they have introduced some of the things that worked well directly into Campanyon, while skipping the things that weren’t quite as efficient.

In the early days, it was clear that too many initiatives were being launched all at once, to make everyone in the organisation comfortable with the new setting of working remotely. This meant almost daily check-in meetings, coffee huddles, shared lunch breaks and other attempts at creating a shared working experience – which to some extent had the opposite effect.

The tech team is the perfect example of Campanyon’s effective teamwork. For Sven, hiring and scaling has been a fantastic challenge and opportunity as the CTO. His team consists of a healthy mix of employees and freelancers from all over Europe.

“We have some incredible talent on board that is motivated to work in an ‘always on’ start-up environment. Open communication and cloud tools that support our development flow allow for rapid iterations of UI/UX and continuous updates of our services.”

Crucial to have local people on the ground

Campanyon has people working from nine different countries now, and nowhere is that more palpable than in the sales team. Kristian sees it as crucial to their success.

“Having local people on the ground across our key markets has been instrumental in growing both supply and demand. The local presence gives us the opportunity to establish relationships with key stakeholders and offer customer service at a different level, something that is particularly important in the Southern European markets we operate in.”

Campanyon has been off to a great start since its launch in 2021. Or as Kristian puts it, they’ve been extremely busy growing since the launch.

“Since we launched the platform last year in April, we have grown from around 100 host listings in Denmark and Norway to more than 3,000 host listings across more than 20 markets.”

Alexander, who embodies the companionship that is core to the company’s ethos, visits many of the newly onboarded hosts to get feedback and foster a sense of community.

“I’ve already met a lot of campers and great hosts in unique places, all of whom have stories that I want more people to hear.”

Campanyon experienced a huge appetite for joining the platform early on and they have used various channels to create awareness and grow the number of hosts in efficient ways.

“We have also seen a large number of organic signups from hosts in locations we don’t actively target, which is really funny and also inspiring, as we see the project resonates across so many different countries and cultures,” Kristian says.

Going forward, the focus for Campanyon will remain on growing in key markets in Europe to further establish their position as the leading platform for stays in nature, while continuing to enhance the user experience to become “campers’ best friend”.

Jeremy Sudibyo

Brand & Content, Campanyon

Years in Schibsted: 1

Our sonic attention is worth fighting for

The politics podcast “En runda till” (“One more round”) with Soraya Hashim, My Rohwedder and Lena Mellin is recorded in Aftonbladet’s studio in Stockholm.

While companies around the world are engaged in an intensifying battle for users’ screen time, the rise of audio might be the next frontier in winning user attention.

Fuelled by wireless headset adoption and an ever-growing selection of content made for listening, the audio trend represents a major opportunity for any company that aims to be relevant during all those moments that users are away from their screens.

Although we cannot accurately predict how much total screen time (and news publishers’ share of it) will grow in the coming years, we clearly see that time spent on audio is growing rapidly. Around the world, more and more people listen regularly, and each person listens for a longer period of time.

In Norway, the share of users listening to podcasts per month has nearly doubled, from 24% in 2017 to 43% in 2020, with Norwegian-language podcasts leading the charge. Users aged 16 to 24 show the highest adoption rates, with listeners in this group averaging nearly two hours per day on podcasts or audiobooks. Among Swedish users in general, average time spent on podcasts and radio daily already matches that of digital news consumption.

Different from radio

While audio as a product is nothing new per se, there are many ways in which the current move to audio is different from traditional broadcast radio:

- It is fuelled partially by hardware adoption, led by AirPods’ exponential growth, having captured more than one-third of the wireless earbuds market. And several other wearable devices have also seen double-digit sales growth over the last few years. A 2022 report estimates that three in four US teens now own AirPods. The convenience of these new devices means people now wear headphones more often and in situations they previously wouldn’t – even while talking to their friends!

- Our mobile devices are always connected, enabling users to listen to any topic, any time, while doing other things. The ability to multitask is, as one would expect, one of the main reasons users turn to audio in their busy lives.

- Lastly, the sheer volume of content is growing rapidly, with an entire publishing industry transitioning to audiobooks, and all-time-high investments from tech and media companies going into the podcast industry.

As users move to AirPods for consuming content, we also see that several audio-first start-ups have emerged over the past few years. In addition, industry experts talk about wearable audio as the first mass market adoption of augmented reality devices. For many young users, audio is their primary channel for news. Clearly, publishers who want to stay relevant must find their place in the audio domain.

For news organisations, understanding the opportunity that comes with audio starts with acknowledging how the newspaper landscape has changed. We’ve gone from a world of physically distributed newspapers, where there was little competition and a general scarcity of information, to a world of unlimited digital distribution and global competition for attention. In this world, news organisations are not just competing against each other, but rather against any company distributing their product on a screen. Those other companies include technology giants with massive budgets and a world-class ability to get users addicted to their products.

News attracts users’ attention

We know that tech and streaming giants dominate users’ visual attention, and it seems unlikely that news publishers will turn the tide on that anytime soon. But in the audio world, news as a category gets an outsized share of users’ attention, accounting for 30% of top podcast episodes despite comprising only 7% of podcasts.

However, increasing audio content production for news organisations does not come without its challenges:

- The cost for voice actors and studio time remains high

- Recording and editing take several times as long as the actual audio output

- There’s a risk of spending significant resources on content of low interest

The perishable nature of news limits the types of content that can be produced without becoming outdated as stories evolve. Today, publishers mostly accept the fact that investments in the audio domain are expensive, and that they will be worth the effort in the long run. But there are also ways that technology can enable production of more audio in smarter ways.

Firstly, the need for studios may soon disappear, as cheaper and more mobile recording setups hit the market. Companies like Nomono (which Schibsted recently invested in) are challenging the existing workflow as well as the costs associated with high-quality podcast production.

Secondly, for narrated articles, we might soon get rid of the need for both studios and narrators entirely as text-to-speech technology matures. A synthetic voice that can read any text input out loud offers some unique advantages. It allows for unlimited production of narrated articles with near zero marginal cost, as it converts a written text into audio within seconds. Since it is connected to the publisher’s CMS, it also enables flexibility to update and edit published stories, without ever needing to step into a studio. The fact that it can be scaled across the entire daily article output of a newspaper also means that users can rely on the feature to listen to any article they prefer and do so while commuting or cooking at home. Since many users cancel their subscriptions because they simply don’t have enough time to sit down and read all the articles they pay for every day, solving this “bad conscience-problem” for subscribers might be a key factor in reducing the churn rates most newspapers are seeing.

Listeners complete more of a story

Early results from text-to-speech experiments in Aftenposten show that the gap between human and synthetic voices is closing in terms of listener retention, and that users opting for audio consumption complete more of each story compared to text. Plans for enabling users to save stories for later listening, as well as the ability to queue synthetically narrated articles after premium flagship podcasts, may all lead to more widespread adoption of audio as a mode of news consumption. The result might be a significant increase in the total time users spend engaging with Aftenposten’s journalism each day – read more about it on the next page.

Looking back at the battle for users’ screen time, as described earlier, could it be that by focusing on users’ eyeballs, we miss an emerging behaviour change that may one day account for most of our time? The next frontier in winning user attention might in fact be about sonic attention, and those who make the right investments now may be on a course to become the giants of the audio world.

Karl Oskar Teien

Director of Product, Schibsted Subscription Newspapers

Years in Schibsted: 8

An AI voice makes news accessible to everyone

Why limit the audio presentation of journalism to podcasts? Aftenposten’s cloned voice will be able to present all the newspaper’s content – and by doing so, give everyone access to the same information.

Today a large part of society is left out when it comes to consuming journalism. It is, in fact, a democratic problem that media prevents people from getting information about society because much content is only accessible as text. This is also a big risk for news companies, as they may be missing out on a market opportunity by not offering an audio alternative to the huge amount of written journalism produced every day.

According to Dysleksi Norge, between 5 and 10% of all Norwegians suffer from dyslexia. This means that as many as 270,000 to 540,000 children and adults in Norway are reluctant to consume written journalism. This is not the only group who have challenges with reading. People with attention deficit disorder concentrate better when listening instead of reading. Refugees and asylum seekers who are in the process of learning Norwegian also find it very helpful to be able to listen and read Norwegian simultaneously.
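The dyslexia range quoted above follows from simple arithmetic. As a minimal sketch, assuming a population of roughly 5.4 million (a figure implied by the numbers in the text, not one attributed to Dysleksi Norge), the calculation looks like this:

```python
# Assumption: a population of about 5.4 million, implied by the article's own figures.
POPULATION_NORWAY = 5_400_000


def people_affected(population: int, percent: int) -> int:
    """Return the number of people corresponding to a whole-number percentage."""
    return population * percent // 100


low = people_affected(POPULATION_NORWAY, 5)
high = people_affected(POPULATION_NORWAY, 10)
print(f"{low:,} to {high:,} people")  # 270,000 to 540,000 people
```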

Students struggle to read

When Aftenposten started looking into this, we primarily had our newspaper for kids in mind – Aftenposten Junior skole. Since this is a news product for use in public schools, we are obligated to fulfil all accessibility requirements.

We learned from teachers that 92% of them have students who struggle to read in their classroom, and we were even told that schools were not interested in buying our product if we could not offer text-to-speech.

Two important observations and findings from our research also convinced us that adults in the future will have needs quite similar to today’s users of Aftenposten Junior skole.

Firstly, we observed that many kids, beyond those who struggle to read, actively chose to listen to the text. And today’s kids and teenagers are potentially future subscribers who tend to bring their media habits from childhood into adulthood. After observing how popular listening is when given the choice between sound and text, we are pretty sure that we need to have a sound alternative ready for them before they grow up.

Secondly, dyslexia and attention deficit are lifelong problems. This means that people who suffer from them will probably still prefer to listen to a long article instead of reading when they grow up, and they will not find our news products worth paying for unless we can offer more than text-based journalism.

A voice you can recognise

Our primary goal was to make an artificial voice with the highest possible quality. That is why we offer a cloned voice and not a purely synthetic voice. A synthetic voice is an artificial voice that is not meant to sound like a specific, real person. A cloned voice, on the other hand, is created in the same way as a synthetic voice but simulates the speech of a real person. That means that if it is a voice that is familiar to you, you will recognise the voice and may even struggle to understand that it is not a real person but rather an artificial cloned voice that’s reading the news for you.

To build an artificial voice we needed speech data. Speech data in this context is recorded sentences from our newspapers. Using our past articles, our collaborator, BeyondWords, extracted 6,812 phonetically rich utterances. These sentences were recorded by Anne Lindholm, a podcast host at Aftenposten, who is now also the voice behind our cloned voice.

After processing the speech data and training a neural network, the first version of the voice was ready – and it was impressive. Anne herself could not believe how similar it had become to her own voice. Still, as with all other AI features, we needed to train it to improve it. By training we mean that a person listened to a huge number of sound files that were converted from articles and reported mistakes.

A linguist from the company that developed the voice technology then made corrections to the phonemic dictionary that served as the foundation for the quality of the cloned voice. When a mistake is corrected in this way, the correction will affect all future articles in which the same words occur. Over the last few months, the voice has improved a lot and we will soon be ready to scale up so that you can hear the voice on many more Aftenposten articles.
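To illustrate why a single dictionary correction carries over to every later article, here is a minimal sketch. It is not the implementation used for Aftenposten’s voice; the dictionary entries, the phoneme strings and the function name `to_phonemes` are all hypothetical, chosen only to show the principle.

```python
# Hypothetical pronunciation dictionary: word -> phoneme string.
# The entries and the phoneme notation are invented for illustration.
phonemic_dictionary = {
    "oslo": "U s l U",
    "ukraina": "u k r A i n A",
}


def to_phonemes(text: str, dictionary: dict[str, str]) -> list[str]:
    """Look up each word; unknown words are passed through in angle brackets."""
    phonemes = []
    for word in text.lower().split():
        cleaned = word.strip(".,!?")
        phonemes.append(dictionary.get(cleaned, f"<{cleaned}>"))
    return phonemes


# A listener reports that "oslo" is mispronounced; a linguist corrects the entry once.
phonemic_dictionary["oslo"] = "u S l u"

# Every article converted after the correction picks up the new pronunciation.
article_one = to_phonemes("Oslo i dag.", phonemic_dictionary)
article_two = to_phonemes("Nyheter fra Oslo", phonemic_dictionary)
```

Because the fix lives in the shared dictionary rather than in any single audio file, nothing needs to be re-recorded: regenerating an article is enough.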

Many benefits with a robot

When it comes to the quality of the voice, a real voice still beats the robotic one. But we have done A/B tests between the real voice and the artificial voice, and the results indicate that the quality difference is not very large and that the benefits of a robot voice outweigh the disadvantages.

One of the benefits has to do with the nature of digital presentation of news. When a dramatic incident first occurs, like the start of the war in Ukraine, the news gets updated from minute to minute, and it is impossible for a real person to compete with the speed of updating audio files with the cloned voice. Not to mention the cost of having a real person doing multiple recordings of an updated article, as well as the time saved for the journalists, who can instead focus on the next news article.

Artificial intelligence and our cloned voice have the potential to be revolutionary and make a hugely positive impact for large groups in our society who now can access journalism they could never access before.

This is why we believe that offering a robot voice based on artificial intelligence is an important bet on the future of journalism. It shows that new technology can contribute to a more open and inclusive society where everyone has access to the same information.

Lena Beate Hamborg Pedersen

Product Manager, Schibsted Subscription Newspapers

Years in Schibsted: 3

Tech trends in short 2023

Tech trends

If we look beyond the metaverse – what other tech trends will affect our lives in one or five years? We gathered some of them, with help from Schibsted News Media experts.

Techlash 2.0

In the last few years, we’ve seen more and more regulation hitting the tech giants and big corporations in the EU, the UK and the US. The regulations put in place by the EU are expected to eventually be copied by the US and the UK, and there will likely be more laws put in place to hold Big Tech accountable.

Bye, bye passwords!

We are moving away from endless lists of passwords, and password managers will soon be replaced by biometric passkeys: the FIDO authentication credential that provides “passwordless” sign-ins to online services. The technology is already widely used by Apple (think fingerprint scanning and Face ID), and it’s likely that more companies will adopt it, using users’ biometric data to create safer login processes and making password leaks a thing of the past.

Social shopping becomes mainstream

Shopping hauls and unboxings have been a social media tradition for years on Instagram and YouTube, but TikTok – and its Chinese counterpart Douyin – have taken the phenomenon into the mainstream. Businesses, from clothing brands to restaurants, are livestreaming to engage with viewers, and they are seeing increased revenues from the social shopping aspect.

Service fragmentation will grow

We’re already seeing the streaming world become severely fragmented, with new services announced all the time. Though the giants may still have the lead, the competition is growing fiercer and the consumer has more choices than ever. We will likely see these developments in other spaces as well, as social media is well on its way and new apps for podcasts are fighting for the users’ attention, too. As users become more interested in niche platforms and products, the fragmenting of our digital services will follow.

The war for tech talent

The war for talent isn’t news at this point, but tech talent is an especially sought-after commodity worldwide. New ways of working and the ability to demand more from employers will have tech workers picking and choosing, while the companies work to improve their offerings, whether at the office or remotely.

Our time is value

We’re seeing an increase in the fight for the users’ time, not necessarily their money. For publishers and social media, attention and usage are becoming far more important in the long run, as exemplified by Netflix’s choice to make a cheaper subscription tier that comes with advertising. Of course, this is not a new phenomenon in the publishing industry, where advertising-based revenue versus subscription-based revenue has been the question for decades. The fact that a user’s time is considered more valuable is becoming common knowledge, and we’ll likely see that mirrored in more companies’ business models in the future.

Your home will be even smarter

Apple’s development of Nearby Interaction will likely spur similar new features from other companies. Nearby Interaction allows Apple users to connect to other devices and accessories depending on their location. The recently announced Background Sessions will enable users to use their accessories hands-free. For example, you could set your music to turn on when you enter your home or a specific room, or you can trigger other actions on connected accessories. This type of technology will probably grow more popular soon, making your smart home even smarter.

Vertical video is winning

TikTok keeps winning ground over other social media platforms, and the rest are left scrambling to keep up. The vertical video format will likely keep gaining in popularity, whether in short- or long-form. And vertical video is expected to be used in other formats as well, with its potential to make news products stronger as publishers work to engage with the medium. Many social media-forward publishers already have large teams in place for Instagram and TikTok, and there is no question that others will follow suit.

Regulation will pave the way for the future of crypto

The crypto winter has made values sink drastically. But Karina Rothoff Brix, from the crypto service Firi, is certain that the crypto phenomenon will be a natural part of our trading culture and system – at least, once regulations are put in place.

To some people, crypto is the latest attempt to reinvent the fastest way we exchange money and goods. And when looking back on history, the evolution of money has always been moving towards more convenience and easier transactions. But crypto is so much more. Some even define it as the next revolution – not only for money but for the entire trading culture and system in our society.

The decline we see is, in my view, a normal part of the market cycles, which influences the perceived and traded value of crypto. But the value behind the crypto projects is increasing as innovation continues. Adoption is here – look no further than the number of ATMs worldwide where crypto is easy to purchase, or the growing number of both private and public organisations that accept crypto as payment or as remuneration.

So, how did the industry grow from small crypto “nerd” projects to its current state, consisting of more than 13,000 different cryptos and an asset that you can pay your taxes with if you live in the state of Colorado, or purchase gas with when driving in Australia?

Several attempts before Bitcoin

We often hear that the story of crypto dates back to 2008, when the most well-known and oldest crypto of all was released with a whitepaper – Bitcoin. But there were several attempts to define e-money or digital currency before Bitcoin was invented or described.

It all began in the early days of the internet when David Chaum, in 1982, wrote a dissertation paper called Computer systems established, maintained, and trusted by mutually suspicious groups. At that time, David Chaum was a graduate student at Berkeley and his dissertation is the first known description of a blockchain protocol. His work laid the foundation for the crypto and blockchains we know today, and it was driven by his motivation to protect the privacy of individuals, a privacy that he feared early on governments would not be able to ensure on the internet.

David Chaum founded a company called Digicash, Inc. in 1989. His company attempted to release an e-currency called E-cash but failed and was then sold in 1995. The world was simply not ready for the technology – as the first online payment from a credit card was made in the early 1990s.

On its way

But the phenomenon was on its way. One of the first worldwide digital currencies was created in 1996. It was called E-gold and was backed by gold. The transactions were irreversible and approximately five million users were registered. But E-gold was quickly adopted by criminals who saw it as a safe haven, as regulation was lacking. Soon the currency was banned by the US government.

One of the first companies to succeed in offering a fast and paper-free transaction method using the internet was PayPal. Both PayPal and E-gold are like crypto in the sense that they use the internet to make transactions. But there’s one thing that is completely different. To simplify – cryptos are decentralised and both PayPal and E-gold transactions were controlled by a central unit.

A paper that made a difference

A milestone in the crypto story happened in 1997, when a researcher from the US National Security Agency (NSA) published a paper called How to make a mint: the cryptography of anonymous electronic cash. It described a decentralised network and payment system.

The concept described in the NSA paper was further developed by two researchers in 1998. Nick Szabo created what he called Bitgold, which introduced the concept of smart contracts to the system. Wei Dai wrote B-money, an anonymous distributed electronic cash system, which described the fundamentals of all the crypto systems we know today. Nick Szabo later helped the founders of Bitcoin code the system based on his findings, and Wei Dai’s work was also cited in the Bitcoin paper. Today, the smallest unit of Ethereum (ETH) is called a Wei.

A more secure system

But it wasn’t until October 2008 that Bitcoin became the first operating cryptocurrency, after adding blockchain technology. This was in the middle of the financial crisis, and some say that the ambition was to create a more secure and sustainable system that could not be manipulated by centralised entities. With a fixed amount of Bitcoin being produced, the mission was also to protect against inflation.
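The fixed supply can be checked with a short calculation. The sketch below assumes the well-known protocol parameters, which the article does not spell out: an initial block reward of 50 bitcoin that halves every 210,000 blocks, counted in whole units of one hundred-millionth of a bitcoin.

```python
# Protocol parameters assumed for this sketch; none of them come from the article.
SATOSHI_PER_BTC = 100_000_000          # smallest unit: 1 BTC = 100 million satoshi
INITIAL_REWARD = 50 * SATOSHI_PER_BTC  # reward per block at launch
BLOCKS_PER_HALVING = 210_000           # the reward halves after this many blocks


def total_supply_satoshi() -> int:
    """Sum all block rewards until integer halving drives the reward to zero."""
    total = 0
    reward = INITIAL_REWARD
    while reward > 0:
        total += reward * BLOCKS_PER_HALVING
        reward //= 2  # integer halving; fractions of the smallest unit are dropped
    return total


supply = total_supply_satoshi()
print(supply / SATOSHI_PER_BTC)  # just under 21 million bitcoin
```

Because each halving cuts the reward in two, the rewards form a shrinking series that can never add up to more than 21 million coins, which is where the inflation argument comes from.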

The Bitcoin vision was published by Satoshi Nakamoto, and it described a purely peer-to-peer version of electronic cash that would allow online payments to be sent directly from one party to another without involvement from a financial institution. The idea was to change the protocols that the financial institutions were building on and transfer funds instantly, anonymously, and without middleman fees and governmental surveillance and control. In January 2009, the first block of the Bitcoin blockchain, called The Genesis, was made.

The first real purchase with Bitcoin was made on May 22, 2010. The pizzas purchased with it became historic because until that point, Bitcoin did not have a value but had only been transferred between peers – and mostly for fun.

The creator disappeared

Satoshi Nakamoto was nominated for the Nobel Prize in Economics in 2015, but he had disappeared shortly after creating Bitcoin. No one has yet been able to identify who is really behind the paper or who Satoshi Nakamoto is. Before disappearing, Satoshi Nakamoto chose a software engineer to oversee the building of Bitcoin’s original coin. His name is Gavin Andresen, and he later founded the non-profit organisation The Bitcoin Foundation. As with Ethereum and its honouring of Wei, the smallest part of a Bitcoin that can be sent is called a Satoshi.
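To make the two “smallest units” concrete, here is a minimal conversion sketch. The unit sizes are facts not stated in the article (one bitcoin is 100 million satoshi, one ether is 10^18 wei); the function name `to_smallest_unit` is hypothetical.

```python
from decimal import Decimal

# Unit sizes not stated in the article: 1 BTC = 10**8 satoshi, 1 ETH = 10**18 wei.
SMALLEST_UNITS_PER_COIN = {"BTC": 10**8, "ETH": 10**18}


def to_smallest_unit(amount: str, coin: str) -> int:
    """Convert a decimal coin amount (given as a string) to whole smallest units."""
    units = Decimal(amount) * SMALLEST_UNITS_PER_COIN[coin]
    if units != units.to_integral_value():
        raise ValueError(f"{amount} {coin} is finer than the smallest unit")
    return int(units)


satoshi = to_smallest_unit("0.00000001", "BTC")  # exactly one satoshi
wei = to_smallest_unit("1.5", "ETH")
```

Amounts are passed as strings and handled with `Decimal` so that values such as 0.00000001 are not distorted by binary floating point.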

In the years that followed Bitcoin’s entrance on the market, the usage spread, but not only to legitimate businesses. Once again, governments had to shut down several illegal websites. The idea that crypto is only for criminals is a sticky myth for the industry to rid itself of, and the need for more detailed regulation is growing.

With a market capitalisation of more than USD 3 trillion at its peak in 2021, the crypto industry is becoming an asset class that our society needs to handle and interact with.

Legal tender in two countries

Two countries have made Bitcoin their legal tender. In El Salvador, Bitcoin has been the national currency since September 2021, along with the US dollar. Every citizen in El Salvador has a digital wallet with BTC in it, and it is mandatory for all merchants to accept BTC as payment.

The Central African Republic also adopted BTC as its legal tender in April 2022, along with the CFA franc. Many among the population, primarily in African states, are “unbanked”, and crypto payments give them access to trades and the basic service of securing their money and receiving payments for goods.

Close to 90 other countries are in the process of deciding the role of cryptos in their jurisdiction.

Regulation in place 2024

Retail crypto investors are also increasing in numbers. In 2021, 8% of American households had invested in crypto; in the Nordics it was between 11 and 15%. The growth is expected to increase with global adoption, along with the EU crypto regulation that is expected to be in place in 2024. With this regulation, institutional money is expected to be a significant part of the growth for the crypto industry going forward.

Another powerful driver for adoption is Web3 – the next generation of the internet. Web3 is expected to be largely built on blockchains, meaning crypto would have an essential role as a digital asset – not only for transaction of payments. In essence, Web3 provides all industries with new virtual markets where the technologies enable people to interact and transfer ownership in virtual settings, seamlessly and conveniently.

The pure digital presence, the virtual interaction, and the gaming habits of younger generations in Western countries show us how owning digital items and being part of virtual events is perceived to be just as real and as valuable for this generation as experiences and assets in the physical world.

This, combined with technology, talent attraction and funding in this space, lays the groundwork for the innovative and disruptive businesses of the future.

The definition of crypto

The use of the word “currencies” when talking about crypto can be misleading because crypto is much more than currencies. A general definition of crypto is: “Digital assets on a blockchain, that can be traded, utilised as a medium of exchange and used as a store of value”. The use of each crypto can vary and be coded to enable different – or multiple – things:

- A security token where the token holder owns a part of the entity that has issued the token.

- A utility token where the token grants an option or a right to the token holder.

- A commodity token where the token represents ownership of another digital or physical asset.

- A governance token where the token represents the token holder’s right to vote or in another manner be part of the governance in a project.

Karina Rothoff Brix

Country Manager Denmark, Firi

Years in Schibsted: Almost one

Stuck in the world of big tech

It’s been a rough few years for a handful of US tech companies, due to a seemingly endless stream of scandals and harsh criticism from politicians on both sides of the Atlantic. The result? “Big Tech” is bigger than ever. But what if they have only started to flex their muscles?

Several executives reacted with shock, according to the people in the room. The proposal meant crossing a line, unleashing a hitherto unused weapon. The code name was “Project Amplify”, and it was a new strategy that social media behemoth Facebook hatched in a meeting in early 2021, as reported by the New York Times. The mission: to improve Facebook’s image by using the site’s News Feed function to promote stories that put the company in a positive light.

The potential impact is enormous. News Feed is Facebook’s most valuable asset. It’s the algorithm that decides what is shown to users when they log in to the site. In essence, it is the “window to the world” for their users, who, totalling nearly three billion, constitute more than a third of all humans on planet Earth.

For many years Facebook founder Mark Zuckerberg defended the company’s policy on free speech with the mantra that the social network should not be the “arbiter of truth” online, i.e., they would not censor content that people posted. Critics would say that Facebook has been doing this all along, letting its algorithms prioritise what is presented to users. What shows up in the News Feed is what people perceive as important, a form of personal truth for every individual. “Project Amplify” would mean something entirely new. By actively promoting positive news stories about the company, “truth” is now the same as “what makes Facebook look good”.

Silicon Valley veteran and social media critic Jaron Lanier has referred to the major social media networks as “gigantic manipulation machines”, possessing the power to alter emotions and political views among billions of people by pulling digital levers. Now Facebook has decided to use its machine for its own purpose.

We will return to the implications of this, but first, it is important to understand why Facebook and Mark Zuckerberg would want to do this. It is no bold assertion to say that Facebook’s public image is in acute need of a facelift. The company has been plagued by scandals for years. In 2018, it was revealed that the company Cambridge Analytica had harvested data from 87 million Facebook users, data that had been used in Donald Trump’s presidential campaign. The revelation not only tarnished Facebook’s reputation, but it also had real financial consequences. When the story broke in March 2018, Facebook’s stock tanked. In July the same year, Facebook announced that growth had slowed down due to the scandal. The stock fell 20 percent in one day. In a few months, 200 billion USD of the company’s market capitalisation was wiped out.

Facebook’s reaction can be summarised as follows: we are sorry and promise to do better. This has been repeated every time new, negative stories about the company emerge, such as the spread of disinformation, the negative impact Facebook’s product has on the mental health of young people, and how the network was used to instigate genocide in Myanmar, among other things.

But behind the many apologies it seems Facebook has continued with business as usual. In September 2021, the Wall Street Journal published “The Facebook Files”, a damning investigation showing that the company, including Mark Zuckerberg, was very aware of the harm the platform was causing. The company’s own researchers identified problems in report after report, but the company chose not to fix them, despite public vows to do so.

From the company’s perspective, its strategy has been a success. Advertising revenues have continued to rise and in autumn 2021, Facebook’s stock market value broke one trillion USD, double what it was before Cambridge Analytica.

The same can be said of the other tech giants. Companies including Google, Amazon and Apple have been in the crosshairs of public debate for years, both for alleged abuse of their dominant market positions and for the negative effect their products and business models can have on people and society.

But if the stock market is a reliable gauge of the future, and it often is, the conclusion is clear: these companies are untouchable. Despite a storm of criticism, court cases and billion-dollar fines, their stocks have continued to climb ever upwards. How is this possible? Let’s start by breaking down the different ways Big Tech dominates the world today.

When discussing this topic, parallels are often drawn to the influential corporations of the late 1800s and early 1900s, Standard Oil for example. These comparisons are misleading. Standard Oil and its owner John D. Rockefeller could never have dreamed of the amount of power that rests in the hands of the Silicon Valley titans of the 2020s.

The new economic superpower

In 2010, the total market cap of Apple, Google, Microsoft, Facebook and Amazon was more than 700 billion USD. That was equivalent to the GDP of the Netherlands. The ascent had been amazingly fast; at this point Amazon was 16 years old, Google twelve and Facebook only six. In autumn 2021, their combined value had reached 9,500 billion USD, more than the GDP of Japan and Germany combined. The total annual revenue for these five corporations is north of one trillion dollars, more than the defence budgets of the US, China and Russia combined.

The market superpowers

Facebook owns four of the five largest social media networks in the world. Google, owner of the second largest (YouTube), has a 92 percent market share in search. Apple’s and Google’s operating systems, iOS and Android, control 99 percent of the global smartphone market outside of China. Apple takes in 65 percent of the global revenue on mobile apps, and Amazon has 50 percent of the e-commerce market in the US, as well as 32 percent of the global market for cloud services, followed by Microsoft. The list goes on. This not only generates huge profits but also creates an enormous asset in the form of the 21st century’s most valuable commodity: data.

The innovation superpowers

Up to 50 percent of the venture capital raised by start-ups circles back to Google and Facebook in the form of advertising, almost as a “tax on innovation”. If new, competing services emerge, Big Tech can either try to buy them or launch competing products. Their head start in terms of resources and user base makes it extremely difficult – if not impossible – for a newcomer to pose a real threat.

The perception superpowers

Twenty years after the 9/11 terrorist attacks, one in 16 Americans believes the US government knew about the attacks and let them happen. Conspiracy theories and disinformation have become the new normal, and research has shown that social media plays an important role. What Google and Facebook choose to allow, or not allow, on their platforms shapes our view of the world. In 2012, Facebook conducted an experiment among 700,000 users to see if their states of mind could be altered by changes to the News Feed. The answer was yes.

The infrastructure superpowers

In December 2020, Google went down, meaning users could not access Gmail, Google Docs or YouTube. Although the outage only lasted 45 minutes, it made headlines all over the globe. The same thing happened to Facebook in October 2021. As an expert said to CNN: “For many people Facebook is the same as internet”. After the 2008 financial crisis it was clear that a small number of banks were “systemically important”. This is now very true for Big Tech. Serious disruptions in their services would quickly have severe and costly consequences.

The political superpowers

Big Tech has surpassed Big Oil as the biggest spender on lobbying in Washington D.C., with an increase in spending from 20 to 124 million USD between 2010 and 2020. In the election cycle of 2020, a total of 2.1 billion USD was spent on political ads on Facebook and Google. In a manifesto published in 2017, Mark Zuckerberg noted that in elections across the world “the candidate with the largest and most engaged following on Facebook usually wins”. In other words: use us or you lose.

The capital markets superpowers

It could be argued that the stock market has become the most important gauge for global decision-makers. It takes decades to make them do anything to combat climate change, but if stocks drop dramatically, decisive action from politicians and central banks is delivered within days or even hours. This was last seen in early 2020, when fears of the economic impact of the pandemic brought the Dow Jones down by 13 percent in one day. The direction of the stock market is, in turn, more and more intertwined with that of Big Tech. Apple, Google, Facebook, Amazon and Microsoft constitute a quarter of the S&P 500 index.

The AI superpowers

“Dark patterns”. That is what scientists call the tricks that digital companies deploy to manipulate users. Sometimes the purpose can be quite trivial, like making people sign up for a newsletter or share their email. The point is that you as a user do something that you didn’t intend to do. With artificial intelligence these tools become more and more powerful and potentially deceptive. The more data an AI algorithm has to train on, the more effective it becomes. This places Big Tech in a unique position to use these techniques. The problem with this is best summed up by Meredith Whittaker, a former Google engineer and now head of the AI Now Institute at New York University: “You never see these companies picking ethics over revenue.”

In all the ways mentioned above, the power of Big Tech is growing bigger every day. It is important to say that not everybody thinks this is a problem. However, there seems to be a consensus among democratically elected leaders in both the U.S. and Europe that the influence of these companies must be reined in. The U.S. and the European Union recently agreed to take a more unified approach in regulating big technology firms. In fact, even the people who work in the industry share this view. In a survey of 1,578 tech employees conducted by Protocol, 78 percent said that Big Tech is too powerful.

So, what can be done? A variety of options are already on the table, from forcing companies to break up to altering laws that give social media companies a free pass compared to traditional media. If the New York Times publishes hate speech, it is liable; when Facebook does the same, it is not. In the US, this legislation is referred to as “Section 230”, and there is a debate around whether to change it. At the same time, numerous lawsuits have been filed around the world against the Big Tech companies on anti-trust issues. The stock market’s message is that none of these measures is likely to harm these companies seriously. And that view could very well be justified. There are several reasons why Big Tech titans can sleep well at night. Let’s run through some of them.

Breaking up is almost impossible

The businesses of Big Tech are deeply interconnected. It would take years of litigation to make such a decision a reality. With 300 billion dollars of annual profits, the legal coffers of Silicon Valley are limitless.

Fines would have to be astronomical to make a difference

Between 2017 and 2019, the EU slammed Google with a total of eight billion USD in fines. That is less than seven percent of the company’s pre-tax profit during those three years. As the stock market often regards fines as a “one-off”, it is not clear if even larger fines would hurt the market cap at all.
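The arithmetic behind this comparison can be sketched in a few lines of Python. This is a rough illustration that uses only the two figures quoted above; the variable names and the 25 percent scenario are hypothetical and not taken from any company filing.

```python
# Figures quoted in the text: about 8 billion USD in EU fines (2017-2019),
# described as less than 7 percent of pre-tax profit over the same period.
total_fines_bn = 8.0
max_share_of_profit = 0.07

# If the fines are below 7 percent of profit, the profit must be at least
# fines / 0.07. This is a floor implied by the text, not a reported figure.
implied_min_profit_bn = total_fines_bn / max_share_of_profit


def fine_for_share(share: float, profit_bn: float = implied_min_profit_bn) -> float:
    """Return the fine (in billion USD) that would equal `share` of the profit."""
    return share * profit_bn


print(f"Implied three-year pre-tax profit floor: {implied_min_profit_bn:.0f} billion USD")
# Hypothetical scenario: a fine equal to a quarter of that profit floor.
print(f"Fine equal to 25 percent of it: {fine_for_share(0.25):.1f} billion USD")
```

Even under these rough assumptions, a fine would have to be several times larger than everything the EU levied over three years before it reached a quarter of the profit earned in the same period.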

Overly drastic measures could trigger a stock crash

In theory, politicians could of course make new laws that severely hurt Big Tech. This would very likely lead to a correction of their stock prices, which in turn would weigh heavily on the start-up ecosystem and the economy at large. To have voters lose trillions of dollars, or even worse their jobs, is not a price any politician is willing to pay.

The companies could fight back

This is the most underestimated scenario of all. What if Google eliminated negative news stories about Google from searches, or monitored Gmail and Google Docs to stop whistle-blowers or investigative reporters?

What if Facebook took down the accounts of politicians who are critical of Big Tech? What if YouTube only recommended documentaries that showed how fantastic Silicon Valley is for humanity?

This might all seem rather dystopian, but the question must be asked. After all, anything that can be done with technology tends to be done. With Facebook’s “Project Amplify”, this is already inching towards reality. Most importantly, what could anyone do to prevent this? The answer is: nothing.

As things stand now, Facebook is controlled by Mark Zuckerberg, and Google by Larry Page and Sergey Brin, who hold more than 50 percent of the voting power in their respective companies. An American president can be thrown out of office, but no one can sack Mark Zuckerberg. And the reality is that Big Tech can use the power of their platforms for more or less any purpose they please. As Facebook whistle-blower Frances Haugen told CBS’s 60 Minutes:

“The thing I saw at Facebook over and over again was there were conflicts of interest between what was good for the public and what was good for Facebook, and Facebook over and over again chose to optimise for its own interests, like making more money.”

Here we arrive at the crux of the problem. Silicon Valley’s algorithms govern the world, but these giants are in turn governed by an even more powerful algorithm: the paradigm that is called shareholder value.

To satisfy Wall Street, Big Tech giants must deliver constant growth and more profits every year. And in the choice between ethics and profit, the answer is, more often than not, profit.

Silicon Valley author and entrepreneur Tim O’Reilly has called Big Tech “slaves under a super-AI that has gone rogue” – meaning the financial markets.

Breaking out of this cycle is easier said than done. Companies like Apple, Google and Facebook use their stock to pay their employees, which means they are highly dependent on stock prices rising.

But bad ethics also runs the risk of alienating these same employees. Internal protests have rocked Google, Amazon, and Microsoft in recent years.

Hurt society or hurt the stock price? Lose staff over scandals or over bad pay? These are the dilemmas that the most powerful companies in history face. Whether Big Tech has really become too-big-to-stop remains to be seen. Ultimately the power rests with you. Without the billions of daily users, Silicon Valley’s influence amounts to exactly zero.