Artificial intelligence

AI already surrounds our lives, delivering great product experiences. It can recognize human speech, spot fraud or drive a car. Azeem Azhar explains what lies behind the AI boom and why it concerns us all.

The idea of “thinking machines” or artificial intelligence (AI) seems ubiquitous now. We’re surrounded by systems that can mostly understand what we mean when we talk to them, sometimes recognize images, and occasionally recommend a movie we might want to watch. These developments are the result of 60 years of fluctuating interest, progress and respectability. Today, awareness of AI is undeniably at an all-time high; so, is it finally time for AI to pay dividends? The short answer is “yes”. But why now? And why does it matter?

The term AI was coined in 1956, when science had tamed the atom and the space age was about to begin. AI was seen as similarly tractable, leading Marvin Minsky, cognitive scientist at MIT, to predict that “Within a generation […] the problem of creating ‘artificial intelligence’ will substantially be solved”.

Sadly, AI was much harder than rocket science, and while rockets landed on the Moon, belief, interest and funding for AI research crashed back to Earth, leading to the first of many “AI winters”.

What the original AI researchers tried to create was AGI, or artificial general intelligence: a computer as smart and flexible as a human, able to perform any intellectual task that a human being can. Meanwhile, science fiction writers were stoking fears about ASI, or artificial super intelligence: computers smarter than humans. These ASIs would either be man’s ultimate nemesis, such as Skynet from the Terminator film series, or our guardian angels, like the Minds of Iain M. Banks’ Culture novels.

The AI we see today is ANI, or artificial narrow intelligence. ANI specializes in just a single area, so an AI like Google’s AlphaGo can beat the world champion at Go, but would be beaten by a three-year-old at recognizing photographs. Baidu, the Chinese Internet giant, has developed an AI able to transcribe speech better than a human, but it’s incapable of opening a door, let alone playing chess. This is because AGI is hard. Things most humans find difficult, such as math, financial market strategy or language translation, are child’s play for a computer, while things humans find easy, such as vision, motion, movement and perception, are staggeringly difficult for computers. Computer scientist Donald Knuth summed it up by saying “AI has by now succeeded in doing essentially everything that requires ‘thinking’ but has failed to do most of what people and animals do ‘without thinking’.” That gap exists only because our own skills have been optimized by millions of years of evolution.

You can go an awfully long way with ANIs, however, and they’re now everywhere in our world. If you use an iPhone, your usage and preferences are modeled by an AI known as a deep neural network running 24×7 on your phone. If you take photos on an Android device, Google Photos uses AI techniques to recognize your chums and describe your pictures. The world’s largest technology firms have recognized that AI builds better products, better products mean happier users, and happier users mean higher profits.

ANI systems can recognize human speech, describe images, spot fraud, profile customers, reduce power consumption or drive a car. So it’s no surprise that Apple, Google, Facebook, IBM, Twitter and Amazon have been busily buying up the top AI startups and hiring talent at a ferocious rate. In September 2016 Apple had a whopping 281 open roles for specialists in machine learning – an important sub-discipline of AI. Google counts more than 7,000 employees involved in AI or machine learning (about 1 in 8). So, what’s behind the current boom? The accelerants fall roughly into three categories:

1. Underlying technologies

Computing is now both powerful and cheap enough to carry out the complex mathematics driving the algorithms that underpin AI systems. Moore’s Law, which predicts the doubling of available computing power every 18 months, has helped, but so have new architectures like the GPU (graphics processing unit), which NVIDIA pioneered for general-purpose computing in the late 2000s. Using GPUs, computations that once took days now take just minutes – and that speed is still doubling every 18 months. The scale of change has literally been astronomical: today, ten times more transistors are made every second than there are stars in the Milky Way galaxy. My Apple Watch has more than double the processing power of 1985’s fastest supercomputer, the Cray-2, which back then cost nearly 20 million USD. Amazon rents out computing power equivalent to one hundred Cray-2s for less than 3 USD an hour.
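A fixed doubling period compounds quickly: if capacity doubles every T months, after t months it has grown by a factor of 2^(t/T). The short Python sketch below takes the 18-month period quoted in the text as its assumption (the figure is often given as two years instead) and shows how fast the multiples add up.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Factor by which capacity grows if it doubles every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


for years in (3, 10, 20):
    print(f"after {years:>2} years: x{growth_factor(years):,.0f}")
```

At an 18-month doubling period, three years gives a factor of 4 and a decade a factor of roughly 100.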

Alongside Moore’s Law has come an explosion of data. AIs are a bit like children: they need to be trained, but they’re comparatively slow learners, requiring lots of data to learn to recognize even simple things like the letter ‘A’. Fortunately, 2.5 billion gigabytes (2.5 exabytes) of data are now generated every day, and more than 90 percent of all the data ever created has been generated in the last two years.

2. Business and technology

The backdrop to this underlying technology has been the increasing computerization of business, prompting tech investor Marc Andreessen to coin the phrase “Software is eating the world”. This insatiable appetite comes from the realization that every business problem now sits behind a digital interface. And as application programming interfaces (APIs) have become the norm for digital interfaces, they make it easier for automated systems, like AI, to access and control them. For example, Uber showed that running a transportation system was really a route optimization and liquidity management problem.

3. Feedback loops

The sum of these two accelerants is the AI lock-in loop, where greater investment begets better results, which in turn beget more investment. That is exactly what we are seeing: massive increases in performance are taking AI systems over a tipping point, beyond which they can deliver a genuinely delightful product experience. And this is the point at which we care about AI: when it does better than a human. Speech recognition systems that are nearly as good as a human are just frustrating – we won’t use them unless we have to. Self-driving cars half as good as an average human driver are a no-go. But once self-driving cars are better, as Tesla’s data suggest they now are, we cross a boundary.

And this is where we are with ANIs. Across an incredibly broad range of domains, artificial narrow intelligence meets or exceeds human performance. And as it does so, the delight we as consumers get from these services draws us to those with the artificial smarts. So, it’s a bright shining future, then? Well, perhaps.

Luminaries such as Stephen Hawking and Elon Musk worry that super-intelligent AIs may ultimately threaten our survival as a species. That’s a subject for another essay, but the growth of AI presents some real challenges. The first is the creation of natural monopolies that would be harder to escape than Google’s search monopoly or Facebook’s social monopoly: “data network effects” favor those with the most data, so will the rest of us become mere sharecroppers? The second is accountability for algorithmic decisions: will these decisions be based on fair, balanced, diverse data, or on biased data and shortcuts that discriminate against women, the poor and minorities? The third is the redundancy of many previously human functions: as AI systems develop and improve more and more skills, will they cost people their jobs, and with them their self-respect and social standing?

It now feels as if AI has turned a corner and already powers parts of our everyday lives. But, like some tragic Greek hero, it is doomed to become invisible the moment it succeeds. We underplay the remarkable scientific achievements of computers that can transcribe speech or drive a car. Indeed, as many in the industry have noted, “We stop calling it AI when it starts to work,” unfairly moving the goalposts whenever AI looks likely to score. By many previous measures, AI has not just scored but has already won the game.

Blockchain

Satoshi Nakamoto probably couldn’t imagine the current craze about Blockchain when he designed the cryptographically secured ledger that forms the basis of Bitcoin. In the high-tech community, its decentralized nature has revived the old dream of an open and egalitarian Internet.

Blockchain combines a decentralized database, strong cryptography and a proof-of-work update mechanism. It relies on a peer-to-peer network of computers to record transactions between pseudonymous participants in a shared ledger in a secure, transparent and reliable way.

Blockchain secures both the ownership and the exchange of value through cryptographic functions (hashes and digital signatures), which make transactions verifiable and prevent participants from repudiating them. Everyone can read the database, which creates transparency. Finally, the distributed nature of the system makes it very resilient.
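The core mechanism, a shared ledger in which each block commits to its predecessor’s hash and can only be appended after a proof-of-work search, fits in a short Python sketch. It is a toy under stated assumptions: it uses plain SHA-256 and a fixed “leading zeros” difficulty rather than Bitcoin’s double SHA-256 and numeric target, and it omits the digital signatures that prove ownership, since the Python standard library has no public-key signature scheme. The participant names are illustrative.

```python
import hashlib
import json
from dataclasses import dataclass

DIFFICULTY = 4           # assumption: a valid block hash must start with 4 hex zeros
GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    transactions: list
    prev_hash: str
    nonce: int = 0

    def digest(self) -> str:
        # SHA-256 over a canonical JSON encoding of the block's contents
        payload = json.dumps(
            {"index": self.index, "tx": self.transactions,
             "prev": self.prev_hash, "nonce": self.nonce},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


def mine(block: Block) -> Block:
    # Proof of work: increment the nonce until the hash meets the target
    while not block.digest().startswith("0" * DIFFICULTY):
        block.nonce += 1
    return block


def add_block(chain: list, transactions: list) -> None:
    prev = chain[-1].digest() if chain else GENESIS_PREV
    chain.append(mine(Block(len(chain), transactions, prev)))


def is_valid(chain: list) -> bool:
    # Anyone with a copy of the ledger can re-check every link and every proof
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1].digest() if i else GENESIS_PREV
        if block.prev_hash != expected_prev:
            return False
        if not block.digest().startswith("0" * DIFFICULTY):
            return False
    return True


chain: list = []
add_block(chain, [{"from": "alice", "to": "bob", "amount": 5}])
add_block(chain, [{"from": "bob", "to": "carol", "amount": 2}])
print(is_valid(chain))  # True
chain[0].transactions[0]["amount"] = 500  # rewrite history
print(is_valid(chain))  # False
```

Changing an old transaction changes that block’s hash, which breaks the link stored in the next block; to get away with it, an attacker would have to redo the proof of work for the whole rest of the chain faster than the honest network.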

Quicker and less expensive

Compared with centralized systems like a bank, Blockchain has built-in trust and is quicker and less expensive for exchanging value. The technology has the potential to extend beyond Bitcoin to decentralized applications that can manage transfers quickly and securely. Developers throughout the world have in fact created applications leveraging the Blockchain in several areas: smart contracts, finance, legal, supply chain and the Internet of Things, to name a few.

Ripple has created a Blockchain to manage international money transfers between financial institutions. It aims to short-circuit the old correspondent banking system with a decentralized network of market makers handling currency exchanges. Its settlement infrastructure is quick (3-6 seconds instead of 2+ days for Swift) and powerful (86 million transactions/day instead of 19 million messages/day for Swift). However, Ripple does not rely on proof-of-work but on consensus, and it is more distributed than decentralized.
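Consensus among known validators, rather than mining, can be illustrated with a simple supermajority vote. This Python sketch is not Ripple’s protocol, which runs several rounds of proposals before agreeing; it only shows the final agreement rule. The 80% threshold is an assumption (it is the figure commonly cited for the XRP Ledger), and the validator and ledger names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from typing import Optional

SUPERMAJORITY = Fraction(4, 5)  # assumption: 80% of trusted validators must agree


def reach_consensus(votes: dict, trusted: set) -> Optional[str]:
    """Return the ledger version backed by a supermajority of trusted validators, or None."""
    # Votes from nodes outside the trusted list are ignored
    tally = Counter(ledger for node, ledger in votes.items() if node in trusted)
    if not tally:
        return None
    candidate, count = tally.most_common(1)[0]
    # Exact fraction arithmetic avoids floating-point edge cases at the threshold
    return candidate if Fraction(count, len(trusted)) >= SUPERMAJORITY else None


trusted = {"v1", "v2", "v3", "v4", "v5"}
votes = {"v1": "ledger-A", "v2": "ledger-A", "v3": "ledger-A",
         "v4": "ledger-A", "v5": "ledger-B", "outsider": "ledger-B"}
print(reach_consensus(votes, trusted))  # ledger-A (4 of 5 trusted votes)
```

No one has to burn computation to earn the right to write, so agreement can be reached in seconds; but only nodes on the trusted list count, which is what makes such a system more distributed than decentralized.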

Peer-to-peer trade

OpenBazaar is an open source, decentralized marketplace for the exchange of goods that brings together sellers, buyers and arbiters. Its creators want to free peer-to-peer trade by abolishing the fees and restrictions set by traditional centralized marketplace players. Sellers list their goods in the OpenBazaar client on their computer, and the listing is disseminated across the network.

Agreed transactions are managed by a smart contract under the supervision of a moderator, which requires two of the three parties (buyer, seller and moderator) to agree before it executes. However, OpenBazaar doesn’t have an internal currency to incentivize participants, nor does it replicate locally stored data throughout the network.
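The two-of-three rule works like a multisignature escrow: buyer, seller and moderator each hold a vote, and the funds move only when any two agree on the outcome, so the moderator matters only in a dispute. The Python sketch below models just that approval rule, with hypothetical outcome names; it is not OpenBazaar’s code and leaves out the cryptographic signatures a real escrow would require.

```python
from dataclasses import dataclass, field
from typing import Optional

PARTIES = {"buyer", "seller", "moderator"}
OUTCOMES = ("release_to_seller", "refund_buyer")  # hypothetical outcome names
REQUIRED = 2  # two of the three parties must agree


@dataclass
class Escrow:
    amount: int
    approvals: dict = field(default_factory=dict)  # party -> outcome it approved

    def approve(self, party: str, outcome: str) -> None:
        if party not in PARTIES:
            raise ValueError(f"unknown party: {party}")
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        self.approvals[party] = outcome  # a later approval from the same party replaces the earlier one

    def resolve(self) -> Optional[str]:
        # Funds move only once REQUIRED parties back the same outcome
        for outcome in OUTCOMES:
            if sum(1 for o in self.approvals.values() if o == outcome) >= REQUIRED:
                return outcome
        return None


# Dispute: buyer and seller disagree, so the moderator's vote decides
deal = Escrow(amount=100)
deal.approve("buyer", "refund_buyer")
deal.approve("seller", "release_to_seller")
print(deal.resolve())  # None: no two parties agree yet
deal.approve("moderator", "refund_buyer")
print(deal.resolve())  # refund_buyer
```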

Ethereum is perhaps the most promising platform using Blockchain technologies. It allows for the creation of smart contracts, small applications stored in the network that run exactly as intended without any possibility of downtime, censorship, fraud or third-party interference. Ethereum has its own cryptocurrency and provides the language to code contracts as well as the infrastructure to execute them. Unfortunately, Ethereum is more famous for its security issues, like the DAO hack that forced a hard fork, than for its real-life achievements.

As seen with these examples, the technologies known as Blockchain are still in their infancy and require intensive development efforts to improve. Investors injected over 1 billion USD into Bitcoin and Blockchain startups between 2013 and 2015, but the flow of money is drying up. Corporates have also entered the game, but with their own set of rules, which translate into systems like R3 Corda: it manages financial agreements between regulated financial institutions but is neither decentralized nor transparent, and it relies on local consensus.

Overall, Blockchain technology has the potential to revolutionize several industries. But the progress made so far shows that it is hard to stick to the original design when extending it to use cases other than Bitcoin. It is almost certain that the future of Blockchain is not in the Blockchain.

Virtual reality

Gaming, sports, travel and entertainment are just a few industries exploring the possibilities of VR. Today several media giants are also experimenting at large scale. But will we all soon walk around with bulky headsets? And will we be able to cope with the intense experience of VR?

First, Buzzfeed’s Ben Kreimer and his 360-video-camera helmet went skydiving. Then he hit the floor of the Republican National Convention. The week after, the VR journalist was balancing on the roof of the Democratic National Convention, offering a virtual reality walk to anyone with a set of VR glasses. And on top of all that, he produced a well-received 360 piece from the locker room of San Francisco’s baseball team, the Giants – that story got around six million views.

“Girls loved looking at the basketball players’ butts, we got a lot of comments about that,” says Ben Kreimer, smiling. Kreimer never paid any attention to the athletic butts of the team as he was filming the piece. “That’s the fun thing about this kind of content,” he says. “The audience will get something out of it that you never expected.”

Huge investments

2016 has been big for virtual reality. Tech giants have been investing huge amounts of money in VR devices, turning out one VR headset after another, each one fancier than the one before. As 2016 took off, the vision of Silicon Valley was loud and clear: Soon all of us will be strutting around with big, bulky headsets taped to our faces, living our virtual reality-lives full on.

But these expectations might have been a bit too lofty. Analysts have been fiercely debating just how quickly consumers will take to virtual reality, but there has been precious little data to inform the argument. The major players involved, including HTC, Facebook’s Oculus and Samsung, haven’t revealed official sales numbers for their VR headsets, prompting some analysts to take a pessimistic view. For sure, a big group of gamers love the new technology – but what about consumers whose primary interest is news?

“Some argue that virtual reality is going to be a big thing for news organizations. I don’t agree,” says Joshua Benton, director of the Nieman Lab. His skepticism about VR in journalism rests on the way people interact with the news: news consumption is very “lean back”, while interacting with VR takes effort, Benton asserts. “People consume news mainly to inform themselves,” he explains. “News should be quick to understand, easy to scan and simple to share. Today’s VR news meets none of these requirements.”

Ben Kreimer, the journalism technologist at Buzzfeed, agrees.

“Of course the hurdle is enormous,” he says. “Having to put something on your face instead of just pulling the Buzzfeed-app up on your phone is huge.”

Behaviors will change

He believes that the 360 content Buzzfeed is producing is mostly watched without VR headsets, and it’s designed to work this way. But things might change as VR content across the board – in gaming, sports, travel, entertainment – grows in volume, and as VR headsets become smaller, cheaper and easier to use.

“When you can use your VR headset for many different things, you’ll probably find it worthwhile to put it on for a couple of hours every night,” he says. “Maybe you are gaming for a while, then you switch over to watch the news or a movie in VR.”

Most big brands in news are experimenting with virtual reality journalism today, including The New York Times, The Guardian, The Economist, The Wall Street Journal and CNN. Some of them even have their own VR news app.

The discussion about the impact of VR experiences has been lively. At the Game Developers Conference (GDC) in San Francisco this past March, Shuhei Yoshida, PlayStation’s head of VR, warned about gaming and VR, even saying that virtual reality could be “addictive”. He called for the gaming industry to act responsibly as new games are developed.

“Some experiences are very intense,” he says. “I’m expecting some people to feel traumatised. There is an enormous power in this new medium.” News editors will have yet another parameter to consider with this new medium.

With 360 video cameras reporters can bring viewers into intense breaking news situations such as demonstrations, war zones and natural disasters. Which stories will be too much to cope with in virtual reality? And if a news organization decides to publish potentially controversial content, will it come with a warning?

“Let’s try things out,” says Buzzfeed’s Kreimer. “Even if virtual reality journalism is not exploding in terms of hits right now, it pays to be a part of it. VR news is going…well, somewhere.”

Printing the future

3D printing is about to revolutionize production. It’s already being used in many different areas, from the car industry to medical treatment. It lowers costs and reduces production time dramatically. And if you keep a printer at home, you can always print toys for your kids.

When my son was born and I was spending my 15 days of parental leave at home with my family, I managed to disconnect from work e-mails and communication, and spent those little bits of free time looking for exciting new topics and technologies on the Internet.

That’s how I ended up backing a “low cost” 3D printer on Kickstarter, which was supposed to be delivered around Christmas. The official excuse I used to reduce friction with my beloved wife was that I was going to be able to print a lot of special toys for our new kid.

Believe it or not, that’s what my printers are doing most of the time even today: printing toys for my son, an almost three-year-old who has already understood the concept of 3D printing. To him it’s a completely natural thing: he was born with a 3D printer in his house and can come up to me and ask “Print me a unicorn” the same way he could ask for a drawing or a glass of water.

Expertise and Patience

Having a 3D printer at home today is similar to having been the happy owner of a PC with a dial-up Internet connection in the early 90s, or of an Internet-enabled phone before Apple launched the first iPhone in 2007. It’s still mostly a technology for early adopters, at least in the consumer space. Operating a desktop 3D printer today requires a certain amount of expertise and a whole lot of patience. Reliability is not there yet, and having to take apart parts of your 3D printer to fix a “clog” is a relatively common task. But 3D printing as a technology, especially in industrial form, is becoming so pervasive that it’s getting hard to find domains where it has not been exploited yet. Among all these new fields of application, there are some where we’re starting to see the real potential.

Historically, the cost of producing physical goods has been extremely high, both because of the expense of gaining access to industrial machinery and because of the incremental cost of each and every change required during the prototyping phase.

The latter point is extremely relevant, since the typical lead time for injection molding, the traditional technique used to produce plastic parts, is known to be between 15 and 60 days. 3D printing can cut the lead time to two or three days at most when using an on-demand printing service, and even to a few hours if you own a printer.

The cost is constant

On the cost side, things are also very interesting. Injection molding has a fixed ramp-up cost, which typically includes producing the mold; this makes it very expensive to produce a single piece, and suited instead to producing goods at scale. With 3D printing, by contrast, the cost of producing a unit is constant, since the setup is pure software and the plastic filament used for every unit is basically the same.
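The difference between a fixed ramp-up cost and a constant unit cost can be made concrete with a simple break-even calculation: for a run of n pieces, molding costs M/n + m per unit (mold cost M, marginal cost m), while printing costs a flat p per unit, so molding only wins once n > M / (p − m). The Python sketch below uses purely hypothetical figures and ignores everything else (machine time, material differences, finishing).

```python
import math
from typing import Optional

PRINT_COST = 8.0  # hypothetical cost of one printed part, USD


def molded_unit_cost(n: int, mold_cost: float, marginal_cost: float) -> float:
    # The one-off mold cost is spread over the whole production run
    return mold_cost / n + marginal_cost


def break_even(mold_cost: float, marginal_cost: float, print_cost: float) -> Optional[int]:
    """Smallest run size at which molding becomes strictly cheaper per unit than printing."""
    if print_cost <= marginal_cost:
        return None  # in this model printing is never more expensive
    return math.floor(mold_cost / (print_cost - marginal_cost)) + 1


# Hypothetical figures: 10,000 USD mold, 0.50 USD per molded part
for n in (1, 100, 1_000, 10_000):
    print(n, round(molded_unit_cost(n, 10_000, 0.50), 2), PRINT_COST)
print("break-even run:", break_even(10_000, 0.50, PRINT_COST))
```

With these made-up numbers, printing is cheaper for any run below about 1,300 pieces, which is why it suits prototypes and small series while molding keeps its place in mass production.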

The automotive industry has been one of the early adopters of 3D printing since the early nineties, when companies such as Land Rover were already using it to speed up the whole R&D process.

Today almost every automotive company is investing heavily in 3D printing, and we have seen the rise of new concepts such as Local Motors, which are likely to do to the car’s body and structure what Elon Musk’s Tesla is doing to the car’s engine: shift it to a completely new paradigm.

Local Motors is focusing on coupling the traditional manufacturing process for the car’s drive train with a completely new approach to producing the structure and body of the car: 3D printing. This allows for a distributed network of mini factories instead of the huge, expensive plants the car industry has traditionally used, as well as a declared lead time of 44 hours for a new car.

This concept is still in its early stages, but it seems obvious that it will have a huge impact on how the car industry will be approaching manufacturing in the future.

The application of 3D printing in medicine is a reality and probably goes way beyond what most of us would believe. Today we’re able to print not just plastic but also a wide range of more exotic materials, such as tissue with blood vessels, ceramic that can be used to foster bone growth, muscle cells, ear cartilage, skull replacement parts and even organs.

Even though most of these materials are still at a very early stage of development, ranging from research in medical universities to successful use in a small set of human patients, chances are that the technology will allow even better medical treatments at what could be a very affordable price almost everywhere in the world.

This will open up opportunities for futuristic scenarios where a person could take a scan of a broken bone, upload it to a website and get a custom-made replacement bone shipped to their address in a matter of days.

3D printing will also generally produce less waste than traditional subtractive manufacturing, because additive manufacturing uses roughly the amount of material the product itself requires, rather than cutting the product out of a larger block.

Another important aspect is recyclability. Today a vast variety of materials can be 3D printed; some of them are not recyclable, but a whole lot of alternatives are becoming more and more recyclable.

Tip of the iceberg

All these examples are just the tip of the iceberg of what is expected to become possible or even the norm thanks to broadening the access to 3D printing technology, especially in countries or areas where typically production costs have been so high that little to no innovation was considered possible.

Thanks to its ability to reduce production costs and lead times, coupled with extreme flexibility in what it can produce, we should really expect 3D printing to become more and more pervasive every day, even though it probably won’t mean a 3D printer in every home, as happened with the first PC revolution.

Creating a brand new lingo

We have enormous amounts of data that is essential for creating great products. But it’s not always easy to use. Big Brain is a Schibsted project to get the data to speak the same language.

“What gets measured gets improved.” You’ve probably come across this quote by Peter Drucker, or one of its variants, more than once. You could argue that some things can be improved without being measured, but it is certainly easier to tell you are improving if you can measure progress.

It goes further than that: without measuring, how would you even know whether something needs improvement? How would you know what you should be focusing on?

The lean startup loop takes it to the next level and makes measurement a key step without which you cannot learn. Ideas and opinions help you formulate relevant hypotheses, but only facts and figures allow you to validate them. It is by measuring experiment results that you learn and decide which changes are worth implementing and which are not.
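One common way to measure an experiment’s result is an A/B test that compares the conversion rate of a control group with that of a variant. The pooled two-proportion z-test below is a standard choice, assumed here for illustration; the lean startup loop does not prescribe a particular test, and the counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test comparing conversion rates of control A and variant B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value using the standard normal CDF: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - phi)


# Hypothetical experiment: 5,000 users per arm, 200 vs 260 conversions
z, p = two_proportion_z_test(200, 5_000, 260, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here the variant’s higher conversion rate (5.2% vs 4.0%) is unlikely to be due to chance alone, which would support keeping the change; a p-value well above conventional thresholds would instead argue for discarding the hypothesis or collecting more data.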

At Schibsted, data scientists and product analysts are not just working closely with product teams; they are part of the team from the very start. Embedded in product teams along with UX experts, their role is to help formulate hypotheses based on data exploration, set up experiments, implement analytics tools and, most importantly, translate results into key learnings that support decision making.

At the same time, because they are part of a wider analytics community within Schibsted, data scientists and product analysts draw on their peers’ ideas, skills and experience. And the challenge is considerable. It’s not just about monitoring product performance against global benchmarks – it’s about discovering strategic and actionable insights, through advanced data exploration and analysis, to support product decisions and grow our business.

Understanding how our users interact with our products, what drives their engagement, what makes them come back or stop using us, fuels new ideas to grow our user base through acquisition and retention. Data analysis, combined with UX user research, also provides a better and deeper understanding of who our users are.

Knowing how specific groups of users behave makes it possible to predict the likelihood of new users becoming active. We can then adapt our communication and product experience accordingly.

Now to the complicated part: all of this makes a lot of sense, but it is easier said than done. Facts on what users do – user events – are collected from different platforms, external and internal, following different formats and logic. It is almost impossible to tie data points back together to get the entire picture. Data cleansing and formatting make up about 90 percent of a data analyst’s work.

For the same reasons, business intelligence experts in the past decade have strongly advocated for one single version of the truth. However, diversity is not necessarily a bad thing. Quite the opposite. Keeping data in its richest form allows us to use it for different purposes at different moments in time. As business and products change, what is true one day might not be true the next. Data you thought useless at some point might become crucial. Striking the right balance between data consistency and comparability on the one hand, and local and real-time flexibility on the other, is a big deal.

With operations in more than 30 countries, speaking almost as many different languages, how is Schibsted keeping this balance while answering the growing need for data throughout the organization? Big Brain. We started working on this project a little less than two years ago. The idea is simple: build a single data platform and a single data warehouse for the whole group, where data is stored following a common data model, but where we also keep raw data to allow further data discovery.

In recent years, thanks to the much talked about “Big Data” technologies, the amount of data we can capture, store and process has fortunately exploded. We are now able to collect events from across a wide range of internal and third party platforms.

Today we have two types of data: behavioral data, which consists of user events triggered from our apps and sites (visits, page views, the opening of a form to contact a seller, etc.), and content data, meaning all data related to content inserted into our transactional databases (e.g., classified ad details such as category, price, title and text, or purchase details of a premium feature such as quantity, type and payment mode).

Behavioral data is captured using Pulse, our internal tracking system, and stored in a cloud-based data platform. The data format used is a combination of common events and customized events. That way we can have the best of both worlds. Critical events are captured in a similar way across all operations and markets, providing us with comparable data, while different teams have enough flexibility to track their own specific events as they wish.
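The “common plus custom” idea can be sketched as an event with a fixed core that every market fills in the same way, plus a free-form bag of team-specific properties. The field names below are hypothetical and do not describe Pulse’s actual schema.

```python
from datetime import datetime, timezone

# Hypothetical core fields that every market sends identically, keeping events comparable
COMMON_FIELDS = ("event_type", "user_id", "market", "timestamp")


def make_event(event_type: str, user_id: str, market: str, **custom) -> dict:
    """Build a tracking event: a fixed common core plus team-specific custom properties."""
    return {
        "event_type": event_type,
        "user_id": user_id,
        "market": market,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "custom": custom,  # free-form properties, stored as sent
    }


def is_valid_event(event: dict) -> bool:
    # Only the common core is enforced; custom properties are up to each team
    has_core = all(event.get(name) for name in COMMON_FIELDS)
    return has_core and isinstance(event.get("custom", {}), dict)


view = make_event("page_view", "u42", "no", page="ad_detail", ad_category="cars")
print(is_valid_event(view))                         # True
print(is_valid_event({"event_type": "page_view"}))  # False: core fields missing
```

Global reports read only the core fields, so they work across markets, while each team can add properties to `custom` without waiting for a central schema change.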

Trailing the user journey

For content data, we first need to import content from our local databases before we can process it and add a common logic to it. Local databases have to evolve according to local and specific product needs, which makes them hard to homogenize. Once raw data is stored in the data platform, we clean and process it and send it to Big Brain. When aggregating the data, we maintain both local and global dictionaries, so it is always possible for local teams to follow up on their own metrics and keep track of historical data locally.
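Keeping local and global dictionaries side by side can be sketched as an enrichment step that adds a global label to each raw record while leaving the local value untouched, so local teams keep their own metrics and history. The market codes, category names and prices below are hypothetical examples.

```python
from collections import Counter

# Hypothetical global dictionary: (market, local category) -> global category
GLOBAL_CATEGORIES = {
    ("no", "bil"): "vehicles",
    ("no", "eiendom"): "real_estate",
    ("se", "fordon"): "vehicles",
    ("se", "bostad"): "real_estate",
}


def enrich(raw: dict) -> dict:
    """Return a copy of the raw record with a global category added; local fields are kept."""
    key = (raw["market"], raw["category"])
    return {**raw, "global_category": GLOBAL_CATEGORIES.get(key, "unmapped")}


ads = [
    {"market": "no", "category": "bil", "price": 150_000},
    {"market": "se", "category": "fordon", "price": 90_000},
    {"market": "se", "category": "bostad", "price": 2_500_000},
    {"market": "se", "category": "cykel", "price": 3_000},
]
enriched = [enrich(ad) for ad in ads]
print(Counter(ad["global_category"] for ad in enriched))  # cross-market view
print(Counter(ad["category"] for ad in enriched if ad["market"] == "se"))  # local view
```

Unmapped categories stay visible instead of being dropped, and because the raw records are untouched, the dictionary can be extended later and the history reprocessed.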

Basically, raw data is available at any time, so we can always reprocess it. At the same time, because data follows a single format and is therefore comparable across markets and products, we can run common analyses and get useful benchmarks. Suddenly it becomes possible to trail the entire user journey, from a user’s very first interaction with our different apps and sites.
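Once events from every product share the same user identifier and timestamp format, trailing a journey reduces to grouping by user and sorting by time. A minimal Python sketch, assuming a common user ID across products and ISO 8601 UTC timestamps (both assumptions, as are the product and event names):

```python
from collections import defaultdict


def user_journeys(events: list) -> dict:
    """Group events from all products by user and order each user's steps in time."""
    journeys = defaultdict(list)
    for event in events:
        journeys[event["user_id"]].append(event)
    return {
        # ISO 8601 strings in the same format and time zone sort chronologically
        user: [(e["product"], e["event_type"]) for e in sorted(steps, key=lambda e: e["timestamp"])]
        for user, steps in journeys.items()
    }


events = [
    {"user_id": "u1", "product": "marketplace_app", "event_type": "contact_seller", "timestamp": "2016-11-02T18:05:00Z"},
    {"user_id": "u1", "product": "news_site", "event_type": "page_view", "timestamp": "2016-11-02T17:40:00Z"},
    {"user_id": "u2", "product": "news_site", "event_type": "page_view", "timestamp": "2016-11-02T09:00:00Z"},
    {"user_id": "u1", "product": "marketplace_app", "event_type": "search", "timestamp": "2016-11-02T17:52:00Z"},
]
for user, steps in user_journeys(events).items():
    print(user, " -> ".join(f"{product}:{kind}" for product, kind in steps))
```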

None of this would have been possible without strong collaboration with our local operations. Any top-down approach would have failed to scale and to provide benefits from day one. BI literature is full of gloomy big-corporation data warehouse endeavors. It is worth mentioning that when we started this project, the level of data maturity in our local operations was disparate: while some had very complete and well-implemented data warehouses, other smaller operations had nothing at all.

It would have been unrealistic and counterproductive to set as an ambition replacing existing data warehouses and stopping local BI efforts. Right from the start we took an “open source” approach to the development of Big Brain. A core team would develop basic functionalities needed by all, without disrupting local road maps too much, and local teams would develop functionalities urgent to them, while making sure they could be reused by other operations. Big Brain functionalities, or “modules” as we call them, are assembled like building blocks.

Focusing on data visualization

What’s next? We still have a long road ahead of us. We want to increase data collection: not all sites feed data into Big Brain today, and we will continue our efforts to unlock access to relevant data at all levels of the organization. An increasing area of focus for our team now is data visualization. As more data becomes available, we can answer more complex business questions, but we still need to find the best way to share findings throughout the organization. In that context, data visualization is no luxury! Having the right charts and graphs can save precious time in understanding data and turning it into action.

Schibsted has an amazing amount of data on user needs and behavior. This is a great foundation for creating successful digital products and services. It is also a huge challenge. To be able to use all data it needs to be comparable.

With a common platform, common data formats and common logic, it is possible to trail the entire user journey through our products and act to make users stay and come back. This is why data is essential, and how it can be used to create real engagement.

The user journey

Exploring the user journey is the most important tool for creating amazing digital products. Mapping this out will help you understand how to extend moments of happiness and how to turn frustrations into opportunities, says Lidia Oshlyansky, VP User Experience in Schibsted.

When building digital products you want to wow the users, and show them that products should always be more than just “good enough”. The key to doing this is understanding how people use the products and recognizing what they like and engage with, so that you can make each experience even better.

For product teams to really understand the what, the how and the why of our users and their product interactions, several things have to come together. First, we have to map their “user journey” – this is literally the path they take when using our products, as well as the before and after steps. Simply stated, this illustrates what leads a user to engage with products, what they do while there, and what they do after they leave. It’s also about how they think and feel during these interactions.

Does that sound a little far-fetched? With the help of our data scientists and product analysts the UX team can get an understanding of what users do, how they do it and why they do it. They help us map the existing user journey, and that map highlights places where things aren’t working as well as they could.

The product team doesn’t control the entire journey: users will often come from an external site, or leave our site and then return. Maybe they find a good ad on one of our marketplaces and decide to call the seller. They leave us and use their phone to make that call. Maybe they use the calendar on their computer to see when they’re free to meet the seller. We don’t control their calendar or their phone, so we have no control of their journey at this point.

However, observing users can reveal opportunities to help – perhaps by extending moments of happiness or “wow” factors and making them even more amazing. We can take the moments that were a little frustrating or didn’t work so well and turn them into opportunities by fixing the situation or really creating something meaningful that then becomes a “wow” moment. Remember the first time you clicked a phone number from an app or mobile website and your phone placed the call without you typing the number? What a lovely “wow” moment that was. Now it’s standard and expected.

Things that don’t work so well can, on occasion, give birth to entire new product ideas. For example, digital music streaming made it easier to “own” and share music without resorting to piracy; services like Lyft and Uber hope to help solve traffic issues and access to transport; electric cars were developed partly in response to rising pollution levels. We can all think of many such instances.

Spotting opportunities

When we know our users by quantitative measures (data science and product analysis) and qualitative measures (user experience, design and research) these opportunities for innovation and change are much easier to spot.

Quantitative data shows us “what” is happening with existing products and services, and also tells us a lot about “how” (What devices are being used? Which pages loaded? etc.). The qualitative side of things can provide the “why” of what users are doing and give a deeper understanding of the “how” (Which device is used in a given situation? In which room in the house? etc.). Combining the qualitative and quantitative gives a fuller picture of the entire user experience, fleshing out a user’s journey through our products and the events outside of them.

With this fuller picture we gain a sense of what isn’t working so well, which returns us to the choice of either simply fixing things or finding a better way of doing things, that is, innovating. Combine every possible source of knowledge about users and their behaviors and you’ll find the moments that make users say “wow!” In fact, this is such an important part of UX that we have it as one of our basic principles: Design for the “wow!” moments.

The Schibsted UX team has decided on a set of principles to support creating the best possible user experience. These are the user facing principles:

- Get to know me – Be meaningful to my life/event. Be helpful and familiar with me. Personalize when it makes sense.

- Make me say wow – Wow factor: make me proud and delighted! Wow, that was easy. Wow, that was important. Wow makes it addictive.

- Earn my trust, and make things safe for me – Let me trust your brand without question. Build stronger relationships with me. Respect ME!

- Anticipate my needs – Pioneer groundbreaking innovation. Stay one step ahead of my needs. Clear barriers in my way. Understand how I get things done.

- Understand why I’m doing this – Recognize and respect my motivations. Give me something valuable in return for my data. Just ask for what you need, not more. Get to know what drives me.

The $ecret b€hind paying

Finn Torget has gone from being a bulletin board to a portal handling transactions. At the same time, the Norwegian marketplace created safer transactions and gained valuable user insight. Lasse Klein takes us behind the scenes.

Finn Torget is a highly successful marketplace in Norway. Like most other marketplaces, it is built around the bulletin board model of connecting a seller with potential buyers and letting them handle negotiation, agreement, shipping and payment outside of the service.

To provide an even better service, Finn has created a service that helps users handle the transaction, both to provide a higher degree of safety and trust for buyers and sellers and to gather valuable insights to improve the marketplace. One important step on this journey was collaboration with Schibsted payment.

Extensive research

To create a payment service like this, we started off with extensive research. We gathered existing research from the marketplace, analyzed user interviews and user feedback, and mapped out customer journeys with pain points to get an understanding of user needs.

Before a payment can be made, we need to know who is going to pay, and how much. This is trivial when buying from a store with fixed prices, but not so straightforward when trading between people with a negotiable asking price. Sellers may want the asking price, they may want buyers to bid, or they may be ok with haggling if it means selling faster. They may or may not want to handle shipping, and they may even be picky about who they want to sell to for items with sentimental value. Buyers may want to see the item first, they are likely to want to haggle, and they may need to have the item shipped.

Verification to build trust

We are required by European law to verify the identity of both parties for payments between people. Instead of just enforcing verification for payment, we made verification a separate feature to reduce fraud and build trust in the marketplace. More than 600,000 users have verified their identity at the time of writing, and nearly half of all ads on Torget now belong to a verified seller.

The service lets buyers get in touch with sellers by sending messages through a chat interface in FINN. We added the offering functionality right inside the chat to allow users to finish their most common tasks without having to leave the conversation.

An intended side effect of this is that the conversation becomes a history of the sale, documenting everything that has been said and done for future reference; in effect, a written agreement.

The system was visualized as a state diagram that shows all states in each negotiation phase with arrows between the states representing actions.

Creating discussions

The diagram has become a great tool for discussions on the user’s context in each step of the customer journey, and it served as a unifying element to align both developers and product owners on a common understanding of what we were building.

It also ended up becoming a model that mapped almost 1:1 between the user interface and the server-side code, using domain-driven design semantics.
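To make the idea of a state diagram that maps onto server-side code more concrete, here is a minimal Python sketch of a negotiation modeled as a state machine. The state and action names (CHATTING, OFFER_PENDING, make_offer, accept and so on) are hypothetical and chosen only for illustration; the actual Torget diagram is not described in detail here. Each entry in the transition table corresponds to one arrow in such a diagram, and the recorded history plays the role of the conversation as a record of the sale.

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical negotiation states (illustrative, not FINN's real model)."""
    CHATTING = auto()
    OFFER_PENDING = auto()
    OFFER_ACCEPTED = auto()
    OFFER_DECLINED = auto()
    PAID = auto()
    COMPLETED = auto()


# Each (state, action) pair maps to the next state: the arrows in the diagram.
TRANSITIONS = {
    (State.CHATTING, "make_offer"): State.OFFER_PENDING,
    (State.OFFER_PENDING, "accept"): State.OFFER_ACCEPTED,
    (State.OFFER_PENDING, "decline"): State.OFFER_DECLINED,
    (State.OFFER_DECLINED, "make_offer"): State.OFFER_PENDING,
    (State.OFFER_ACCEPTED, "pay"): State.PAID,
    (State.PAID, "confirm_receipt"): State.COMPLETED,
}


class Negotiation:
    """Domain object whose state changes only through named actions."""

    def __init__(self) -> None:
        self.state = State.CHATTING
        # Every transition is logged, giving a history of the sale.
        self.history: list = []

    def apply(self, action: str) -> State:
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"action {action!r} not allowed in state {self.state.name}")
        new_state = TRANSITIONS[key]
        self.history.append((self.state, action, new_state))
        self.state = new_state
        return new_state


if __name__ == "__main__":
    deal = Negotiation()
    for action in ["make_offer", "decline", "make_offer", "accept", "pay", "confirm_receipt"]:
        deal.apply(action)
    print(deal.state.name)  # COMPLETED
```

Keeping the allowed transitions in a single table means the same structure can drive both which buttons the chat interface shows and which requests the server accepts, which is one way the near 1:1 mapping between interface and server-side code could be achieved.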

We made a fully functional interactive HTML prototype for user testing before anything was actually built. Removing functionality from the prototype based on user feedback was significantly cheaper than having to do the same based on a fully developed application.

The two-sided nature of the marketplace made it possible for us to combine dogfooding and user testing in an amusing and interesting way in production. We negotiated and bought items from people without telling them that we were designing the product. This allowed us to interact with real people with real motivations, selling their own stuff in real life. Talking to these sellers afterwards gave us valuable insights that helped us improve the service – and it filled up our desks with stuff like card decks, games and Minion dolls.

The new conversational user interface for negotiation and payment has been live in some categories of Torget for a while now, and it is constantly being improved. In the near future we will be rolling out the functionality to the whole of Torget and to other FINN marketplaces, and later on we plan to deliver offering and payment as common Schibsted components for easy integration in the many other popular sites across Schibsted’s global ecosystem.

UX dos & don’ts

By now we know that good UX is crucial to win the hearts of the users. But how do you go about achieving that? Future Report asked the Schibsted UX team to share their best advice to reach success.

VALERIE COULTON

Title: UX Program Manager

Years in Schibsted: 5

I’m inspired by: Interfaces that work well and provide something beyond pure functionality.

- Share your work all the time, with a wide range of people.

- Stay on top of what others are doing, inside and outside of your sector.

- Practice empathy for internal users, leaders and stakeholders.

- Don’t let design critiques happen in an ad hoc way; structure the process for relevance and positive evolution of both design and designers.

- Don’t take your product design too far before checking that underlying assumptions are backed up with evidence.

- Don’t assume that leaders outside of UX don’t appreciate the huge impact you can have on the product from strategy to shipping. They may simply not realize what you can offer to each stage.

THOMAS DJUPSJÖ

Title: UX Designer at Tori.fi

Years in Schibsted: < 2

I’m inspired by: working closely within a highly competent team with a common goal and target.

Short answer: The people I work with

- Iterate, iterate, iterate. You seldom get things right the first time. Don’t be afraid of trying out new things and putting work aside.

- Benchmark and take a step back, look at stuff happening around you.

- Listen to and observe users, develop features that they use, not what they want.

- Don’t assume anything. Base your facts on user research, experimentation and iteration of new concepts.

- UX is always a balance between different areas (e.g. sales, user needs and trends). You can’t satisfy everyone, so make compromises.

- Don’t underestimate or shoot down anyone’s ideas before you have evidence or proof that something doesn’t work.

AXEL HAUGAN

Title: Head of UX, Next Gen Publishing

Years in Schibsted: 4

I’m inspired by: Pop culture and people who have an infectious passion in any trade or field.

- Start: Get all of the ideas out of people’s heads immediately. They are pure gold for triggering other ideas within the team.

- Expose: Show everything to as many people as you can find or have time for. This will add perspective and tell you if you’re on to something, or not.

- Launch: It will not be anywhere near what you wanted. But it will be exposed to users. They will tell you what to fix first.

- Don’t overthink: Everything can’t be solved in your head. Almost any form of visual output will help get an idea to the next level.

- Don’t reinvent the wheel: It’s possible you don’t need to rethink the sign-in metaphor for every project. Good artists copy, great artists steal.

- Don’t play it safe: No great idea, no next level thinking, no revolutionary product came from being comfortable.

KAIJA OMMUNDSEN

Title: Head of UX FINN

Years in Schibsted: 11

I’m inspired by: Coaches that bring the best out of a team and make the most out of people. Go Iceland football team!

- Iterate between the big picture and details. Understanding of the solution as a whole is established by reference to the parts and understanding of each part by reference to the whole.

- Import good solutions from other settings to your context. Key learnings and concepts from other areas like sports, gaming or music can be very useful.

- Use the creativity around you. Be precise about the problem you want to solve, for whom and the feelings you want to achieve.

- Don’t stay in one perspective too long. People do not use one page at a time. They are on a journey.

- Don’t limit your thinking to the box by copying the market leaders in your category. If you do, you will never be ahead of the game.

- Don’t be in love with your solution. There is always a better solution out there. Only the problem will stay the same.

PAULA MARIANI

Title: Head of UX @ Schibsted Spain

Years in Schibsted: < 1 year

I am inspired by: Users actually using our products in real life situations.

- Draw as much as you can! Paper prototypes are easy to make, cheap, and even therapeutic. They allow you to learn from users in a fast and effective way.

- Spend a lot of time together. Team members need to enjoy what they do. Generate spaces of freedom that are conducive to creativity.

- Iterate. It’s not about you, it’s about what works best for the users and the business.

- Don’t focus on quantitative data alone. Qualitative data is also important.

- Don’t work in isolation. Everyone is responsible for a great user experience.

- Don’t forget the “wow” factor. Being feature driven is so last year!

KRISTEL COVER

Title: UX Manager at Segundamano México

Years in Schibsted: 1.5 years

I’m inspired by: Fun and happiness, I believe that all products that we use should be a bit fun.

- Map your journeys and not just your flows, think of moments within your users’ life and what they feel or think while using your product.

- Learn Excel and interviewing techniques; as a UXer, you’ll seldom need just one of them.

- Be a great PR person within your work environment. Your product will rarely depend only on your touch points, it will always need coherence across the silos.

- Don’t let your ego take the wheel on your decisions; stakeholders and angry users are wise sources for success.

- Don’t make decisions until you’ve seen both the numbers and the comments. Mix and match and draw good insights.

- Don’t let anyone on your team avoid taking part in the research; it’s the backbone of their comprehension of the goal and the path.

BENGT HAMMARI

Title: Head of UX & Design, Schibsted Publishing Sweden

Years in Schibsted: 10

I’m inspired by: products and services that disrupt markets, and change the landscape, by finding new ways to meet users’ and customers’ needs.

- Solve user problems and needs. Focus on outcome and impact, not output and building the next “trending” feature.

- Be insights-driven, not just data-driven. Data will tell you what users do, not why.

- Release early and often. Get early customer validation instead of releasing a feature-packed product with unknown user value late in the process.

- Don’t assume that the users are like you. It’s not very likely that the user you are designing for has the same needs and behavior as you.

- Don’t just design what users tell you they want. Figure out why they want it first.

MELANIE YENCKEN

Title: Head of UX, New Marketplace

Years in Schibsted: 1

I’m inspired by: Lean product development in small, cross functional teams.

- Make decisions based on behavior, not opinion – look at what your users do, not what they say they will do.

- Focus on delivering an outcome not only output – e.g. more searches performed instead of releasing a search feature.

- Explore and iterate – constantly push the mentality of revisiting things and evolving them.

- Don’t think of yourself as the ‘user’ – try to always check your idea with actual users and get out of the building to validate it.

- Don’t underestimate the power of a true delighter in your application – this makes or breaks experiences.

- Don’t assume UX is only the responsibility of the design team – it’s everyone’s responsibility in the business to help define our UX.

DAVID SNOW

Title: Head of UX, Schibsted Media Platform

Years in Schibsted: <1 year

I’m inspired by: How open, social tools have enabled people without any technical skills or training to create really useful stuff.

- Take time to bring people around you up to speed on all research and thinking behind your work. They can’t really contribute unless they are on equal footing.

- Every second week, watch your customers use your products. There is no faster way to rid ourselves of overconfidence than to see customers struggle with a feature that is ‘completely obvious’.

- Invite someone from customer service at the earliest stages of your design process. They will tell you in detail who our customers are, how we’ve failed them before, and how we can avoid making those mistakes again.

- Don’t be too proud of your own ideas, be proud of making sure the best solutions get to our customers.

- Don’t think of yourself as just empathizing with customers, think of yourself as demonstrating the power of empathizing with customers. You’ve done your job when team members that don’t even get close to touching the user experience passionately argue for customers.

- Don’t get too attached to any tool, methodology, process or approach. There is no silver bullet. (The real skill comes from seeing when each one is called for, and why.)

FILIPPO MACULAN

Title: Head of UX, Subito

Years in Schibsted: 4

I am inspired by: Services that know and foresee my needs, which I already know I am able to use.

- Subtract instead of adding; try to get to the essence of the project. Verify your findings and, before adding anything, consider whether you can reach the same result by modulating what you have.

- If the unexpected marries experience to culture, great ideas can arise. Chance is necessary because it is outside of logic. With logic you can test things that already exist, with intuition, combined with play, there is a different approach.

- Communicate and share without thinking about it. A person is valuable for what he or she offers to the collective and not for what he or she takes from it.

- The final project is not everything. Focus on the path you are taking with your team to reach your goals.

- Don’t tell people you are collaborating with WHAT to do, but rather explain HOW they could do it. Always put new contents on the table.

- Don’t wait for the “official release” to show your product to your audience. Go to the streets and present your idea to people to observe their reactions. You don’t know your users until you face them.

LASSE KLEIN

Title: Head of UX, Payment & Identity

Years in Schibsted: 3

I’m inspired by: The maker movement, tinkerers and geeks.

- Design with a vision. You can’t test your way to a good product, but you can test and adjust a good design once you have it. The dialectic pendulum has swung from the old thesis of designers working from their own minds to an antithesis of UXers iterating their way up from user feedback. Can we arrive at a synthesis of a user- and design-driven process?

- Reason from first principles, the Elon Musk way: “Boil things down to the most fundamental truths and say, ‘What are we sure is true’… and then reason up from there”.

- Make functional prototypes early. Iterating on a thin but fully functional shell makes externalizing and early testing possible without investing in expensive backend development.

- Don’t lose sight of the user and the product. It’s easy to get caught up in the internal workings of a large company, and spend too much time on things that will never make it to the product, or forget that we’re also solving business goals.

- Don’t stop at the minimum viable product. Lean development is great, just don’t leave the MVP out there – keep on iterating until you have a delightful product.

- Don’t spend time testing best practices. Some things can be taken for granted to free up time for innovation.

ANGELA LAREQUI

Title: UX Manager, Marketplace Components

Years in Schibsted: > 1 year

I’m inspired by: Having the chance to build products for the future.

- Get excited, fall in love with your product, but always put the user first. Enjoy it, but stop and get back to the user, even when it hurts. Design the product for them, not for you.

- Create unique solutions to solve users’ problems. Look for inspiration beyond the main trends and outside your discipline. Inspiration is great, but don’t let it hide your unique way of creating products.

- Be practical, be intelligent and take advantage of what others are doing well. Use tools, methods, resources, visual material that will help you minimize the process without compromising the uniqueness of your product.

- Don’t mimic. Mimicking others won’t really bring any attention to what matters.

- Don’t assume, confirm your hypothesis with data and users.

- Don’t work isolated for too long.

- Don’t rush your career progression, and don’t ever consider yourself senior enough. There is always something to learn.

KRISTIN VÅGBERG

Title: UX Manager at Blocket

Years in Schibsted: 2

I’m inspired by: Lean UX: Fail fast, work together and get outside the building to challenge your assumptions.

- Take time to investigate why you are doing a project. Decide what the effect should be for your business and users, how you will measure your success and which of your target groups will accomplish the desired effect. This will help you steer your project and prioritize along the way.

- Fail early! Test your concepts as early as possible to know if you are right or not. More than 50 percent of design ideas do not move you in the right direction for your business metrics.

- A great designer needs to be humble. Listen and include your team as much as possible in the design process. Hero designers belong to the era of waterfall processes – not for Lean UX.

- It’s tempting to start a project by making detailed designs, but it will slow you down. Always start with rough concept sketches and iterate down to detail, so that your discussions with your team and stakeholders focus on the important things.

- Don’t think that design doesn’t matter. A checkbox more or less in your checkout can mean plus or minus millions of dollars, depending on the size of your business.

- Don’t design without knowing your users. It is the user who makes your product successful. Make persona posters and communicate them in your organization so that everyone knows them.

AMBREEN SUBZWARI

Title: UX Research Lead for Rocket

Years in Schibsted: < 2 years

I’m inspired by: What makes us all unique as users, and how human diversity varies by culture, geographical location, environmental factors, languages and more.

- Involve stakeholders in user test sessions. This will give them empathy and understanding of the way users interact with the product.

- Record your test sessions. The recordings are a great source to help revisit anything you missed. Video clips also make an excellent resource to illustrate a particular insight or recommendation to your stakeholders.

- Be prepared to invalidate your assumptions/hypotheses: One of the exciting things about research is when users’ actions and feedback are unexpected and go against your preconceived assumptions.

- Don’t leave user testing until too late. The earlier your product is put in front of users, the more likely it will be aligned with end-user needs and wants, potentially saving a lot of time and money on big design and technical changes.

- Don’t make decisions based on feedback from two or three test users; wait until you’ve tested the design with all your test participants. Researchers are looking for trends in user behavior rather than individual anecdotes or insights.

- Don’t spend too much time on writing detailed reports for regular test cycles: As many of us move towards a more lean approach to UX, our deliverables also need to be presented in a lean way. Construct a template that allows you to quickly and efficiently capture the core findings.

CAROLIN KAIFEL

Title: UX Manager @ Car Vertical

Years in Schibsted: > 2

I am inspired by: Great products that solve the user’s need before he or she has even realized it.

- Iteration is king – the product is never finished.

- Test everything – nothing should be released without being tested with real users.

- Usability trumps beauty.

- Don’t forget the context – users have different expectations on each operating system and device.

- Don’t get too influenced by your competitors – they are probably redesigning their whole product at the moment.

- Don’t forget to check the numbers – you need them for analysis and validation.

Next generation

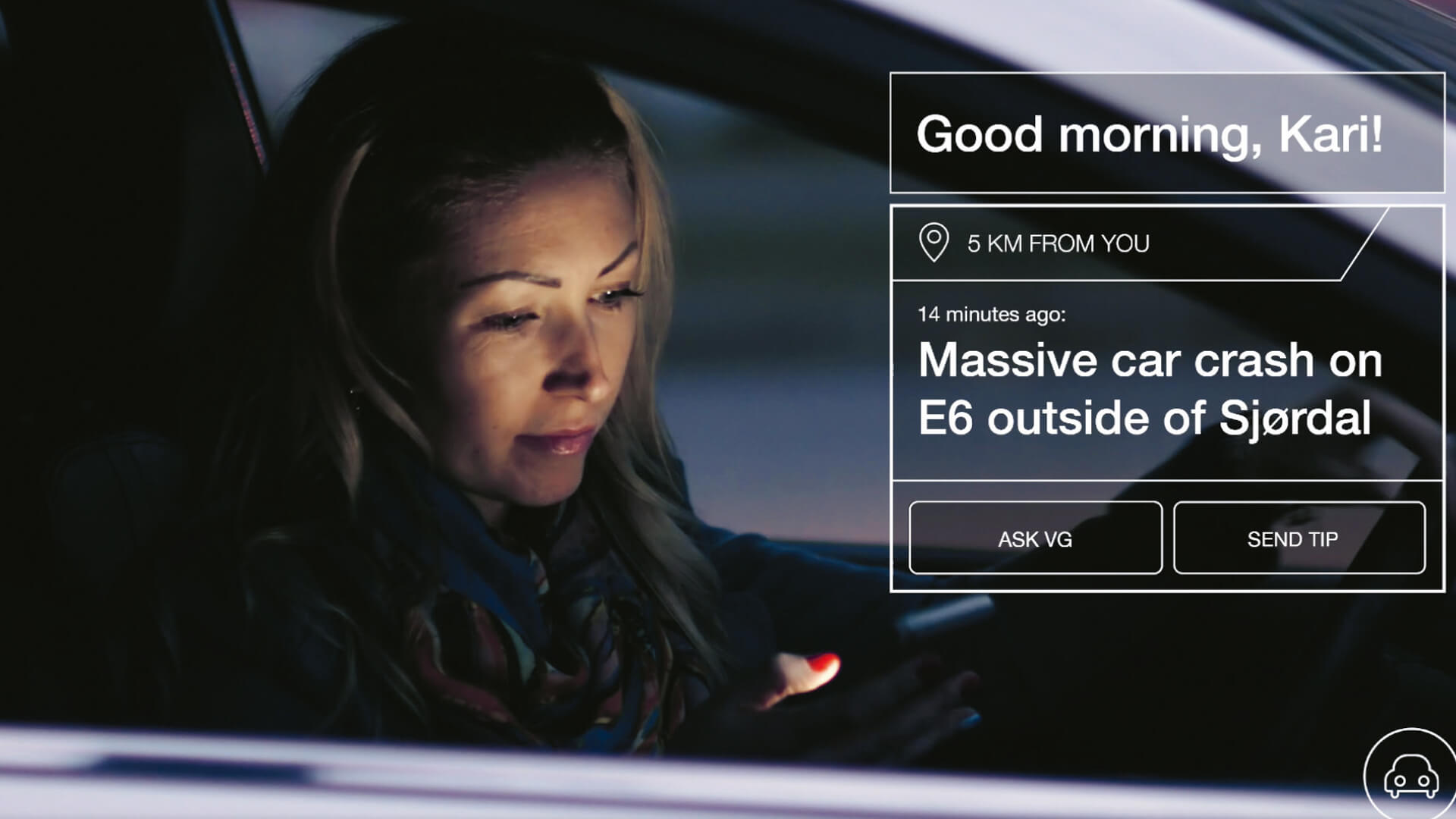

If you think the step from print to digital was challenging, prepare yourself: The next leap for publishing and journalism is far bigger, more complex and way more exciting. It’s about going from one-size-fits-all journalism to 1:1 journalism, says Espen Sundve, VP Product Management at Schibsted.

All publishers are at a crossroads, whether they want to admit it or not. They are left with a simple choice: either lead the charge to redefine journalism and their products, or become mere content providers for external platforms, making those platforms the de facto publishers of our time. To put this in terms from The Matrix that my tech peers can relate to, publishers need to choose between the red pill and the blue pill.

Taking the blue pill means moving deeper into a role as pure content creators for third-party platforms – platforms that dictate the editorial and business rules without claiming any editorial and financial accountability for independent journalism. The drawbacks of this direction are pretty clear – this results in a great concentration of power around the platforms.

Taking the red pill means facing reality and creating an alternative – a reality in which the publishers reinvent their established products to remain relevant as destinations for their readers. A reality in which accountable editorial voices define the technology, and algorithms are used to serve and fund independent journalism.

Taking the red pill means taking a leap and reinventing established products into a new publishing suite that will be successful in the user engagement battle for years to come. At Schibsted we think of these as our Next Gen Publishing Products. But we’re no longer big enough to do it alone. None of us are. Either we all make this leap together, or we all end up swallowing the blue pill.

Strong digital positions

It’s not like publishers have completely missed the mark with established digital products. Many of us have managed to build strong digital positions for our established brands. But let me share a few of the established “rules” that seem to prevent us from making radical leaps towards a more engaging user experience:

- We create pieces of content meant for everyone, and manually curate our front pages.

- We have adapted the old print user experience to a desktop format, and later the desktop user experience to mobile.

- We’re content-centric in all that we do, not user-centric. One example: The user interaction model is to navigate by topic or format (not by user mode), and we primarily care about pageviews (not user events).

- We originate all content ourselves, making the idea of being deeply relevant to a very broad audience a very expensive affair.

- Advertising is produced, served, presented and tracked outside the editorial content and technology solutions – resulting in a cluttered and slow-loading user experience.

- Journalists, business developers, designers and engineers devise and implement ideas to improve aspects of the product without working together, with no one responsible for the total product.

When we look at trends in consumption of online content and the “rules” holding us back, we can sum them up in one major bottleneck: we are still broadcasting journalism, while new digital content distribution platform players such as Facebook and Google are offering deeply personal experiences.

But instead of merely throwing out the buzzword “personalization” and pretending that we’ve found the solution, it’s essential to start by asking ourselves why we engage in journalism in the first place. How could our journalism be 10x or 100x more relevant if we could tailor it to each individual reader?

The true purpose of journalism

Being a technologist and somewhat new to the media industry, I’ve spent a lot of time recently trying to understand the true purpose of journalism. This is important, because if we technologists do not fully adhere to the foundational principles of journalism, we can never truly join forces with the newsroom. I’ve realized I can express the purpose of journalism in a way that will resonate with any technologist – as an optimization challenge:

Journalism exists to minimize the gap between what people already know and what they should and/or want to know – so that people can make informed decisions about their personal life, community, society and governments.

To help close that gap, we can simplify and say we do three jobs for the end user:

- We connect the user with a story

- We tell the story

- We engage and involve the user

To make the leap from broadcasting to 1:1 journalism, we have to innovate along all three of these dimensions. With a one-to-one relationship with each (logged-in) reader, fueled by data collected on their behavior, context and preferences, we should be far more sophisticated in optimizing what we show to whom, and when and where we do it. We have two major advantages in this game over Facebook and the like.

First, we can be fully transparent in how we curate. We embrace editorial responsibility, so while tech platforms leave the user in the dark as to how content is filtered, we can dare to be open about why and how we curate to close the gap between what you know and what you should (or want to) know. Second, we have journalists and editors and their inherent curatorial skills: while Facebook pays 30 contract workers and has 700 reviewers around the United States who assess and train the news feed algorithm, the publishing industry collectively has thousands of the world’s premier content experts – the journalists. By incorporating their assessment and know-how into algorithms defined and owned by publishers, publishers should be well-armed in the fight for user attention.

New form of storytelling

In telling stories, we currently create a single story for everyone. We believe media and journalism should break with this and invent a new form of adaptive storytelling. As the creators of content, we can and should capitalize on the competitive advantage we have over players like Facebook. The Facebooks of the world do not produce their own content; they primarily focus on how to personalize the filtering of it.

Publishers, on the other hand, can start to personalize down to the level of content creation. Ideally, the stories I read should match my level of insight, interest, and past behavior within every topic, my preferred way of being informed (say, pictures over text), my current context and more.

If newsrooms dare to rethink what they produce (such as leaving articles behind for something more granular), journalism will be far more relevant. In engaging an audience, we’ve always invited them to contribute with opinion pieces and tips; recently, we’ve begun offering share buttons and comment fields next to our articles. Beyond that, we’ve mostly outsourced engagement to social media.

Technology companies are great at bringing users on a journey and connecting them for discussion. If media companies had better insights and data on their users, they could be far more sophisticated in how they tailor engagement options to users depending on their behavior, preferences and context. This would not only increase distribution and reach of the content, it could also provide valuable audience input to enable the newsroom to create even better journalism.

A personal editor

For our next-generation products, Schibsted has formulated a vision: They should deliver and tell news in a way that makes users feel like they have their own intelligent personal editor. Let’s pause for a moment on the word “editor.” The rise of pure tech platforms as a primary source of journalism and opinions presents us with a serious societal challenge. Tech platforms inherently neglect editorial accountability, and also do not curate content with any mission to challenge individuals with what they should know.

By an intelligent personal editor, we mean:

- An editor who optimizes her algorithms, for example making sure some news reaches everyone, while other news reaches only the right niche audience.

- An editor who can help people understand complex, contemporary issues through personalized storytelling, meeting each individual user's preconceptions so that they can understand and engage with the story.

- An editor who intelligently guides the user through a world of information overload, selecting and presenting relevant content from various sources.

- An editor who can be trusted to give users a balanced view of the world, avoiding filter bubbles while fostering dialogue.

- An editor who knows how to surprise and entertain users, not merely challenge and enlighten them.

- An editor who allows advertising to have the same great user experience as journalism.

- An editor who ensures that the user has a seamless experience across any device.

Let’s be clear: We do not yet have the magical roadmap that shows how we’ll get to 1:1 journalism. But we’re determined to build, test, validate, and fail or scale new products, processes and experiences.

If any of us are to be successful at curating relevant experiences for each individual user, we have to be able to pull from a wider volume of content. And not just any kind of content, but quality journalism; this can only be achieved if media companies make all content available to all other publishers. Furthermore, only by sharing the user engagement data we collect can we gain the full user insight we need to rival the data power of tech platforms. Not only do we need to collaborate on capturing data to be competitive in the advertising market, but we also need to allow the free flow of content between our brands to be more relevant and to maximize our collective reach.

Our curation process is still largely manual. Moving from print to digital, we invented “front editors.” Now we have to do it again, but this time, our front editor needs to tune algorithms rather than words and pixels. Instead of defining placement and format in curating content, they should be concerned with defining user segments.

Content creation as a weapon

The third hurdle lies in the way we create our content. In particular, we need to go beyond articles. The key issue is that our current formats don't allow for adaptive storytelling – storytelling in which we adapt to users' knowledge (what they've already read), interest level, context and more. Circa News paved the way for atomizing news, and The New York Times has written about "particles" on its blog. Furthermore, with conversational news apps paving the way for bots, our old article format simply won't cut it. As tech platforms compete for user attention by redefining content distribution and engagement, our greatest weapon in the fight for relevance could lie in our core task – content creation.

Fundamentally, if we want to be better at engaging each individual user – taking them from fly-by readers to a loyal and actively engaged audience that discusses and adds value to the stories – we have to know who our users are. As of now, we don't. The challenge to solve here is to give users compelling reasons to be identified (logged in). In doing so, we have to move beyond mere vanity features ("save this article for later") and marketing campaigns ("log in to win an iPad" or "get premium free for a week"). We have to make our product experience better if you're identified, and this requires making journalism personal.

We are at a point where media and journalism have to take a stand. Publishers either must submit to the new rules defined by the pure tech platforms, giving them our content and data and making them stronger every day, or they must decide to evolve journalism into something that truly embraces the opportunities we have – the chance to invent true 1:1 journalism.

Just as we evolved from print to desktop and from desktop to mobile, we now, together, must decide to embark on the mission to reinvent ourselves once more – at our core – before someone else does it for us. That is why we are investing in Next Gen Publishing products, because journalism is not disrupted by digital. It's enabled.

Trend update since last year's future report

Is the IoT a hit, and what about smart devices and the sharing economy? The Schibsted Products and Technology team helped us revisit some of the issues they covered in last year's report to see what happened.

New wave of start-ups doing one thing really well

The ongoing price war between Amazon Web Services, Google and Microsoft for the dominant position in the cloud space continues to bring prices down, with new advanced offerings from the big players. At the same time, the number of open APIs is growing, and this will continue to inspire third-party contributions on top of platforms operated by large players. There is also a new intelligent layer of tools emerging to orchestrate the large distributed computing and storage power of the cloud more efficiently and flexibly. These trends will result in a new wave of "no-stack" start-ups, i.e. companies that focus on doing only one thing really well and use other services for everything else.

Always connected with smart devices

The official release of Apple Watch in 2015 marked the start of wearable commercialization. The competition to become "the next operating system" is on between Apple, Google and Microsoft. Major high-tech players are now starting to invest heavily in wearable smart devices, and the numerous smart device projects on Kickstarter show that widespread adoption is approaching, which will lead to exponential growth in applications. Smart devices will keep us always connected to the Internet – "always on" – and will therefore collect much more data from our daily lives.

Data science - now for everyone

Data science is becoming an integral part of product development, and more advanced algorithms are available via off-the-shelf products. This enables companies further down the value chain to use data science without extensive knowledge of the underlying algorithms, so they can better focus on delivering a good user experience. At the same time, increasing attention to privacy and user aversion to intrusive data collection and application features fuel the usage of anti-data analytics. Users will also demand better transparency about how their data are collected and applied.

IoT – Personalization in the physical world

The emerging smart home has Internet connectivity embedded in everyday objects such as light bulbs, thermostats and control systems to facilitate control and coordination among objects. Now the concept is maturing, with commercial product releases from GE, Philips, Sony and Xiaomi. IoT will see extensive usage in both enterprise and consumer products, with smart cars being the next area after the smart home to be commercialized. IoT is going to vastly increase the data available in real time to businesses and consumers, and personalization will move into the physical world. Different people might see different ads on outdoor banners – everyone having a personalized environment around them wherever they go.

The sharing economy keeps disrupting

Strong efficiency-driven economics will help sharing economy models disrupt even bigger parts of the "ownership economy". Uber's 40 billion USD valuation shows that the capital markets have strong faith in this model, and more traditional B2C verticals will face competition from the sharing economy model. More tools and support have become available for building these products, and differentiation will be the key to survival going forward. Some peer-to-peer platforms must first resolve regulatory pushback (e.g. transportation permits, lodging tax, financial transaction oversight) and provide more end-to-end tools and services to ease individual compliance requirements.

User curation and cross-screen shopping in e-commerce

Customer expectations for quick delivery and hassle-free returns, combined with economies of scale, favor established players such as Amazon and create high barriers to entering e-commerce. There are two long-known ways to differentiate from the competition, and both still work. Focus on service: Amazon is pushing service to a new level with Prime, Local Stores and Home Services. Focus on price: Alibaba and the hype of shopping directly from China prove that the low-price driver still works too. But first-mover advantage helps local leaders win over Amazon in their local markets. Etsy, as the sole star in niche e-commerce, hints that the key to success is a discovery model powered by user curation, not just a niche product collection. Cross-screen shopping will soon be the standard consumer behavior pattern, with more and more purchase intent starting on mobile. And the emergence of call-to-action ad formats for e-commerce with one-click buying could change the dynamics of e-commerce and challenge Amazon.

Identified web more crucial than ever

Login and identity data are more crucial than ever. Behind this lies the urge to deliver the best possible personalized services and offers, and the fact that cookies are dying as traffic moves to mobile (apps) and wearables. Identity data is applied in social network and media personalization and in targeted advertising. Large players are increasingly determined to own it fully, and new industries, such as telecom players, are entering the game. Login also becomes more important to advertising as ad blocking goes mainstream. In the long term, a distributed identity, i.e. an identity solution that does not require a single company or institution as the central operator, built on blockchain infrastructure (the technology that originated with Bitcoin), might gain large-scale traction.

Serendipity - In search of the human algorithm

The very same Internet revolution that created the global village has made bumpkins of all of us. A system of organization, curation and recommendation of information makes each of our views of the Internet more and more insular. This lack of diversity makes us lose sight of the benefits of serendipity that often leads to creativity and new ideas, says Professor R. Ravi who is examining how to design for coincidence.

Imagine the pleasure of browsing in a large musty bookstore with racks of undiscovered treasures. Now imagine that all the books were of the category that you particularly liked. What if the bookstore itself was full of just your favorite authors and the styles you enjoyed? This is the intrinsic promise of the Internet and its vast collection of curated and classified information. In fact, Vannevar Bush's article "As We May Think", a precursor to the conception of the Internet, specifically calls out the ability to navigate this maze of information using links that lead to related topics.

The sheer volume and scale of information available on the Internet has made categorization, indexing and searching the most important tasks, as attested by the influence and market power of the leading search engine company. In an attempt to make the information presented to a searcher more and more relevant, Google introduced an important new feature in 2009: search personalization.

Different results for different people

What this means is that the search results for the same keyword will be different for different people. The search results for "whaling" will be different for an investment banker and for an environmental activist, and they will also differ between environmental activists in Norway and the US. Mostly, the difference makes the results more relevant and convenient to sift through. Similar personalized recommendations exist in most large repositories, such as YouTube, Amazon and news websites. One of the promises of Schibsted's SPiD system is the development of similar personalization for logged-in customers.
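To make the "whaling" example concrete, here is a minimal sketch of how personalization can re-rank the same result set for different people. It assumes, purely for illustration, that each result carries topic tags and that each user has a hypothetical interest profile mapping topics to weights; real search engines use far richer signals, and none of these names come from Google or SPiD.

```python
# A minimal sketch of personalized re-ranking. The Result fields, the topic tags
# and the user profiles are hypothetical, chosen only to illustrate the idea.
from dataclasses import dataclass


@dataclass
class Result:
    title: str
    base_relevance: float  # how well the page matches the query; same for everyone
    topics: frozenset[str]


def personalize(results: list[Result], profile: dict[str, float]) -> list[str]:
    """Rank results by query relevance plus the user's affinity for their topics."""
    def score(r: Result) -> float:
        affinity = sum(profile.get(topic, 0.0) for topic in r.topics)
        return r.base_relevance + affinity

    return [r.title for r in sorted(results, key=score, reverse=True)]


results = [
    Result("Whaling industry stocks", 0.5, frozenset({"finance"})),
    Result("Campaign against commercial whaling", 0.5, frozenset({"environment"})),
    Result("History of whaling", 0.6, frozenset({"history"})),
]

banker = {"finance": 1.0}
activist = {"environment": 1.0}

print(personalize(results, banker))    # finance story ranked first
print(personalize(results, activist))  # campaign story ranked first
print(personalize(results, {}))        # no profile: plain relevance order
```

The same query and the same pages produce a different top result for each profile, which is exactly the property that makes results "more relevant" for each person while removing the shared list everyone used to see.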

Why this becomes problematic is articulated fully in Eli Pariser's book "The Filter Bubble". In short, personalization removes the standard frame of reference that is necessary for common dialogue among an informed populace. Providing that common frame is an important responsibility of journalism, media and information sites in a well-functioning democracy.

Removing the common frame of information reference puts each of us in our own filter bubble, creating echo chambers where we predominantly hear only from like-minded individuals. Less noticed still is the loss of opportunities to form serendipitous connections to new information, which are important for cultivating a diversity of thought. Such a diverse frame of mind is an important precondition for creative invention, which could also be stifled by excessive personalization.

Myopic recommendation systems

If there are so many problems with personalization, why is it so prevalent? The answer comes from the design decisions made in the crafting of the clever software systems that allow us to navigate through information. A common metric of effectiveness in navigating systems (such as search engines, recommendation systems and relevance engines) is how much responsiveness (click-through) the automatic suggestions receive.
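Optimizing for click-through can make a system myopic, because an item can only earn clicks if it is shown. The minimal sketch below assumes a recommender that ranks purely by observed click-through rate (clicks divided by impressions) and shows the top k items each round. The item names, the starting counts and the fixed "true interest" rates are all hypothetical. Under these assumptions, a new story that readers would actually like most is never shown, so its click-through rate stays at zero and it never climbs the ranking.

```python
# A minimal sketch of a purely CTR-driven recommender and its feedback loop.
# All item names, counts and interest rates are hypothetical.


def ctr(clicks: int, impressions: int) -> float:
    # Items that were never shown get a CTR of 0.0.
    return clicks / impressions if impressions else 0.0


def recommend(stats: dict[str, tuple[int, int]], k: int) -> list[str]:
    """Return the k items with the highest observed click-through rate."""
    return sorted(stats, key=lambda item: ctr(*stats[item]), reverse=True)[:k]


def simulate(stats: dict[str, tuple[int, int]], true_interest: dict[str, float],
             k: int, rounds: int, impressions_per_round: int = 100) -> dict[str, tuple[int, int]]:
    """Each round, show the top-k items; only shown items collect clicks."""
    stats = dict(stats)  # copy so the caller's data is not mutated
    for _ in range(rounds):
        for item in recommend(stats, k):
            clicks, shown = stats[item]
            new_clicks = round(impressions_per_round * true_interest[item])
            stats[item] = (clicks + new_clicks, shown + impressions_per_round)
    return stats


start = {"local politics": (30, 100), "football": (20, 100), "new science story": (0, 0)}
true_interest = {"local politics": 0.3, "football": 0.2, "new science story": 0.5}

final = simulate(start, true_interest, k=2, rounds=10)
print(recommend(final, 2))         # ['local politics', 'football']
print(final["new science story"])  # (0, 0): never shown, so never clicked
```

The metric itself is not wrong; the problem is that it only measures responses to what the system already chose to show, so it keeps confirming its earlier choices.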