Millennials worry about online integrity

Millennials are aware that the data they leave behind is valuable – and they worry about how it is used. Future Report has compared their online habits in Sweden, France and Spain.

Millennials are the first generation to grow up surrounded by mobile technology and social media. They live in the moment and have been described as unattached, connected, free and idealistic. They also go about their business at a time when personal data is currency. So what is their view on integrity and privacy online? In this year’s edition of Future Report, we find that millennials in Sweden, Spain and France do care about their online privacy and do worry about their integrity online.

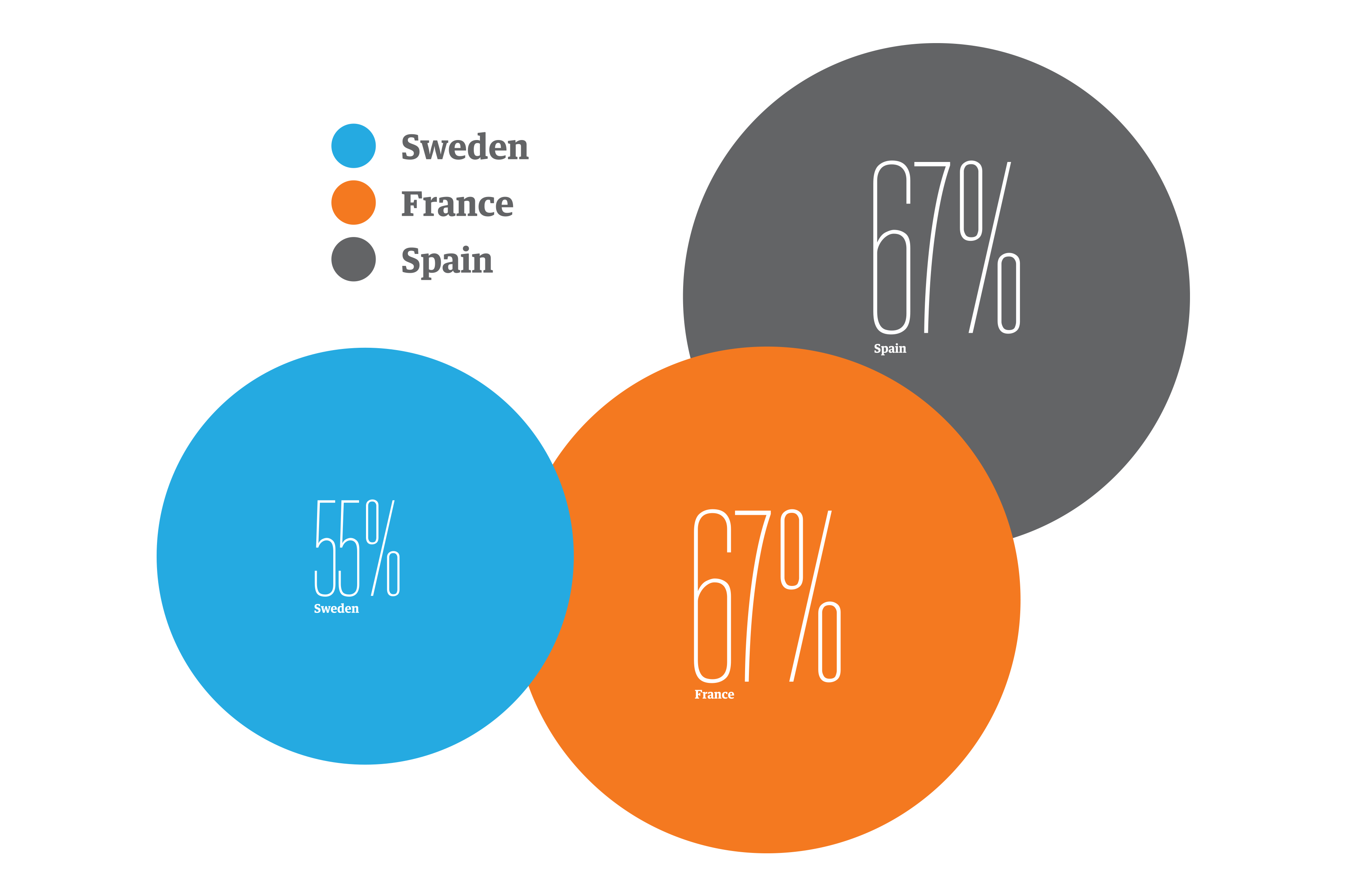

In our survey, 67% of Spanish and French millennials said they worry that the information they provide on social media can be used to influence political views.

Millennials’ digital lifestyle involves having more friends on their social media profiles and sharing their location with friends and family, although they are less likely to share it with businesses and employers. They are also more likely to delete social media profiles entirely. Millennials are known to have little trust in companies and brands. They don’t trust companies like Facebook, even though they interact with them and share personal information with them.

In the wake of the unexpected outcomes of the Brexit referendum and the US presidential election, a heated discussion arose about social media, fake news and the use of psychographic data. With the GDPR coming up, it is even more important to understand digital habits related to data security. Our study shows that a majority of millennials in Sweden, France and Spain are worried that the traces people leave on social media are used to target messages in order to influence elections.

Careful about traces

Millennials are aware that the personal data they provide has value. A majority in all three countries say they worry about what states and companies know about them.

Overall, millennials are careful about the digital traces they leave. This is borne out when we ask whether they have changed the settings on their smartphone to protect their personal integrity: many say they have. And when it comes to ad blockers, the vast majority say they use them.

Read the full report:

Future Report 2017 Final Version

Be prepared for the post-privacy era

The realization that the digital footprints we’re leaving behind are telling the story of our lives and our personalities has started to sink in.

But how does this really work behind the scenes? Michal Kosinski, a psychologist and data scientist at the Stanford Graduate School of Business, knows this better than most. If you recognize his name, you might have heard it in connection with the election of Donald Trump. Kosinski’s research warned that data could be used to influence voters in political campaigns. In a study conducted in 2013, Kosinski set out to test whether people’s intimate traits could be revealed from seemingly uninformative footprints alone, such as the songs they listen to and their Facebook likes. The answer was yes.

“Facebook probably knows more about you than you do yourself”

“AI can be used to predict future behavior and help us understand humans a bit better. But one of the potentially negative implications is that it can be used to manipulate people, to convince them to do things that they otherwise wouldn’t have liked doing,” he explains. “Facebook probably knows more about you than you do yourself. In the work that I published back in 2013 I said – look, this is possible.”

This is possible because far more is recorded than what we actually publish on Facebook: your whereabouts, messages you wrote but never sent, a friend who is stalking you and your spouse’s contacts, to name a few. “Maybe you never reported your political view on Facebook, but from your different actions and connections, Facebook will still know and tag your profile. Their reason is selling ads.” Many other industries take the same approach. Mastercard and Visa no longer define themselves as financial companies, but as customer insights companies.

“I’m a psychologist, I’m interested in human behavior and how we apply big data generated by humans to predict their future behavior and explain their psychological traits. But the very same models can be used to predict the stock market or the price of raw material or tech-hacking. There are consequences for media, for politics, for democratic systems, health care, you name it.” It is AI systems that put the puzzle together: they can reveal patterns in all the digital traces we leave behind.

As humans, we can only process a limited amount of information, and we don’t see all the links connecting characteristics and people. But the connections are there, and computers excel at putting them together because they can detect patterns and aggregate them. The same predictions that can be made from digital footprints on Facebook, from language, websites and credit cards, and from anywhere else we interact digitally, can now also be made from a facial image alone.

“As humans we are predicting a broad range of traits from faces. From a fraction of a second of exposure, we can very accurately interpret an emotion. We have millions of years of training on this – because it’s so crucial for surviving. Being able to spot if someone is angry or very happy will determine if you should run away or hang around. We really don’t know how it works, basically your brain just does it.”

It turns out that your face holds a lot of information: gender, of course, but also genetic factors, such as traces of your parents. Our hair reveals information about hormones, and so does the tone of our skin; even environmental and cultural factors can be traced in our faces. After just a few years of trying, computers are now very good at predicting gender and age, and they have even become better than humans at the very human task of reading people’s emotions. The problem is that we don’t really understand how this happens either.

“The mathematical foundation of the models is really simple; it’s basically a long equation, but with millions of coefficients, far too many for the human brain to comprehend. But the computers are able to spot those tiny details that humans fail to see, and add them up.”
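To make the idea of “a long equation” that adds up tiny details more concrete, here is a minimal sketch in Python. It assumes a simple logistic model, a common choice for this kind of prediction but not necessarily the exact method Kosinski used: each digital footprint (here, a hypothetical page like) carries a weight, the weights of the footprints a person has are summed, and the total is squashed into a probability between 0 and 1. The footprint names, weights and bias are made up for illustration; a real model would learn millions of coefficients from data.

```python
import math

# Hypothetical coefficients, one per digital footprint (e.g. a page "like").
# These values are invented for illustration only; real models learn
# millions of such coefficients from data.
WEIGHTS = {
    "likes_page_a": 0.8,
    "likes_page_b": -0.5,
    "likes_page_c": 0.3,
}
BIAS = -0.2  # hypothetical baseline score


def predict_trait_probability(footprints: set[str]) -> float:
    """Sum the weights of the footprints present, then map the score to 0..1."""
    # Footprints the model has no weight for contribute nothing.
    score = BIAS + sum(WEIGHTS.get(f, 0.0) for f in footprints)
    # The logistic function turns an unbounded score into a probability.
    return 1.0 / (1.0 + math.exp(-score))


if __name__ == "__main__":
    p = predict_trait_probability({"likes_page_a", "likes_page_c"})
    print(f"{p:.3f}")
```

With these made-up weights, a person who liked pages A and C gets a score of -0.2 + 0.8 + 0.3 = 0.9, which the logistic function maps to a probability of about 0.71. Each individual term is small and easy to read; the model becomes hard to comprehend only when there are millions of them.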

In a recent study, Kosinski also claims that AI can now detect sexual orientation with 91 percent accuracy. The claim has sparked debate and been questioned, but there is still no doubt that AI already has abilities we could not have imagined just a few years ago.

“When I’m talking about this in the western free market, people think of how some creepy marketing or politician will try to take advantage of us. But when you reflect on people living in countries that are not so free and open-minded, where homosexuality is punished by death, you realize that this is a very serious issue.”

Michal Kosinski is certain that we have entered the post-privacy era and that there is no turning back. His message is that we need to think about how we want this to play out. “The sooner we start thinking about how to cope, the safer and more hospitable the post-privacy era will be.”