AI – a toolbox to support journalism

As artificial intelligence makes its way into editorial products and processes, media organizations face new challenges. They need to find out how to use this new computational toolbox and how it can contribute to creating quality content.

Do you find human-like robots creepy? You wouldn’t be the first to feel that way. As early as the late 1940s, Isaac Asimov’s novels introduced ‘the Frankenstein Complex’ as a representation of human beings’ intricate relationship with humanoid robots. Although it was coined in science fiction, the term has found its footing in very real scenarios today, most notably in concerns about robots replacing our jobs.

The concerns are not unfounded. Robots (or programmable machines) are indeed employed in large numbers across the globe, rendering many human workers in sectors such as manufacturing, transportation and healthcare obsolete. These industries are undergoing rapid transformation through the use of robotics and technologies such as artificial intelligence.

These concerns extend into the media industry as well, where both creators and consumers of news express unease about the potential downsides of AI. To address these concerns, it is about time we offered an alternative narrative to the Frankenstein Complex!

We might as well start with the basics. Robots are highly unlikely to enter newsrooms any time soon. What is already there, though, is a great new computational toolbox that can help human reporters and editors create and share high quality news content.

AI technologies are currently used in newsrooms in myriad ways, from data mining to automated content production and algorithmic distribution. While the possibilities are wide-ranging, the applications tend to be geared towards information processing tasks like calculating, prioritizing, classifying, associating or filtering information – sometimes several at once.

With recent advances in technological domains such as natural language processing and generation (NLP/NLG), the potential to leverage AI in editorial products and processes is increasing rapidly. At Schibsted, we are currently exploring the use of AI in news work in various ways: helping editors decide when to put content behind the paywall, supporting journalists in tagging their articles, and optimizing something as old-school as printed newspapers to maximize sales and minimize waste.

AI learns from the past

The opportunities offered by AI are vast, but the technologies won’t help with every newsroom task. To responsibly leverage the potential of AI, reflecting on the unique traits of humans and machines becomes key.

AI systems are incredible tools for identifying patterns in data. However, this same capability makes AI technologies susceptible to reinforcing biases. Through technologies such as face recognition and language translation, we have uncovered a key limitation of AI: it learns from the past.

Journalists, on the other hand, shape the future. They introduce new ideas through stories and reporting, often subtly influencing the ways our societies and democracies progress.

In order to recognize the unique skills (and limitations) brought by both sides of a human-machine relationship, we need to equip ourselves with reasonable expectations. We need to stop portraying AI as flawless human-like robots excelling at any task given to them. Instead, we should offer a narrative in which human beings are assisted by computational systems.

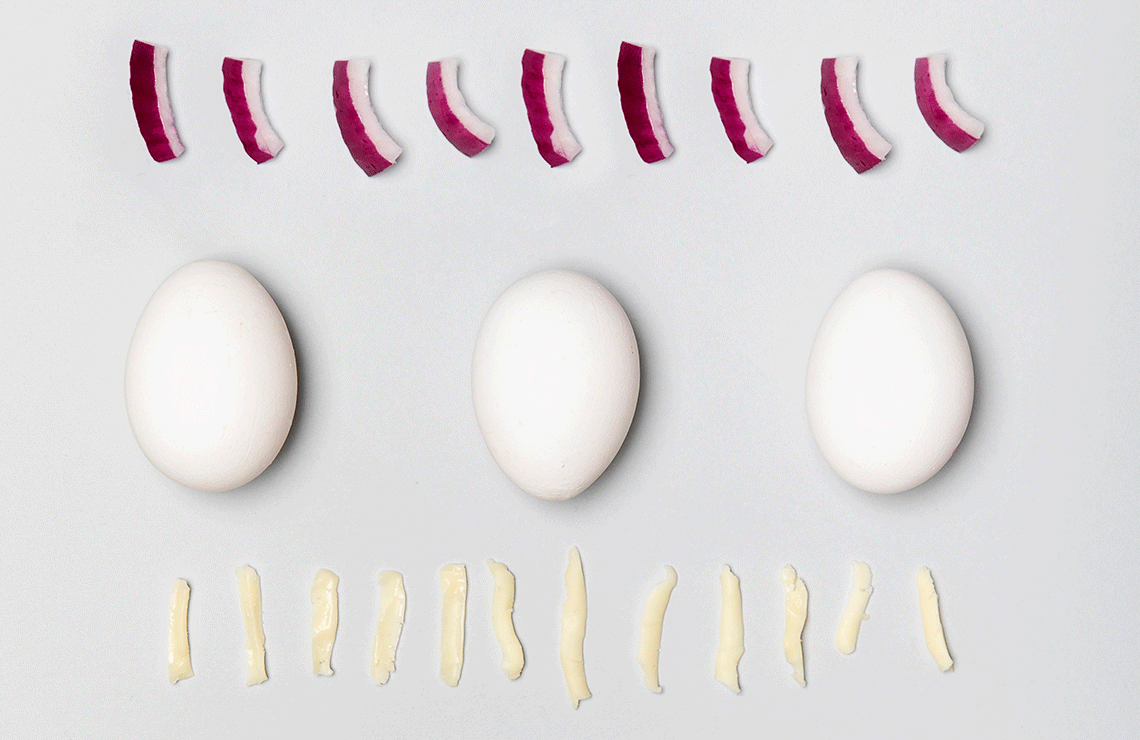

Let’s use a kitchen metaphor. If you are expecting an AI system to bring you a perfect omelet, you are bound to be disappointed. But if you are expecting the AI system to help prepare your ingredients – crack the eggs, grate some cheese, chop an onion – you are more likely to end up with a great lunch. You might have to pluck some eggshells out of the mix or do a second round of onion chopping, but the overall process will be smoother with the help of AI.

Training is needed

The idea of humans and machines working together is gaining traction in academia, not least in journalism studies, where the term ‘hybridization’ is increasingly used. One way of enabling constructive hybridization is to routinely practice decomposition. This means breaking down big news projects into smaller, more tangible tasks, so that news professionals can more easily identify what can be done by the machine and what requires human expertise.

To get to this point, news professionals should be offered appropriate training and information about the potential and the limitations of AI technologies. Introductory courses such as Elements of AI are a great starting point for anyone looking to familiarize themselves with the terminology. However, news organizations (Schibsted included) need to go beyond that and step up their game in culturally and practically upskilling the workforce, aiming to bridge gaps between historically siloed departments.

We need to bring our entire organizations on board to understand how to responsibly leverage these new technologies. Schibsted is currently part of multiple research efforts at Nordic universities, such as the University of Bergen, NTNU in Trondheim and KTH in Stockholm, where we explore both the technological and the social aspects of AI. Just as in academia, we need to take an interdisciplinary approach when equipping our organization with the skills needed to thrive with AI.

We put ideals such as democracy and fair competition at risk if we allow the global information flow to be controlled – implicitly and explicitly – by a few conglomerates. It is time for news organizations to take the lead in the industry’s AI developments. This does not mean that we need to match big tech’s R&D funding (as if that were an option…), but we need more reflection on, and engagement with, how we want AI to impact our industry, our organizations, our readers and, ultimately, society.

A pressing task for the news media industry is to ensure that AI in newsrooms is optimized for values that support our publishing missions. To do so, we have to stop talking about robots and focus on how newsrooms – and, to be clear, the human beings in them – can benefit from the capabilities of these new technologies. One such effort is JournalismAI, a global industry collaboration run by Polis at the London School of Economics, of which Schibsted is a part. There, newsrooms from across the world are joining forces to experiment with and test the potential of applying AI to achieve newsroom goals. The collaboration is a great illustration of what would make a nice bumper sticker: Power to the Publishers!

Agnes Stenbom

Responsible Data & AI Specialist/Industry PhD Candidate

Years at Schibsted

2.5

What I’ve missed the most during the Corona crisis

Global leadership.